In a world of high-traffic IoT systems, delivering real-time data updates is critical for enhancing user experience. A scalable architecture is essential to manage continuous streams of information from connected devices, be it for smart home applications, industrial monitoring, or connected vehicles. Here, we’ll discuss a general technical approach to building a high-scale, reliable event-streaming platform, using a Connected Vehicle Platform (CVP) as an example.

Connected Vehicle Platform Use Case

We are building a high-scale connected vehicle platform for one of the leading automobile manufacturers. Currently, the platform hosts more than 300k vehicles, and on average at least 50k of them are actively streaming data to the system at any given time.

As part of building the platform, we needed to process the stream of data received from each vehicle and make it available on the user’s mobile application in near real-time. Each data packet is around 500kB to 1MB in size. Depending on the vehicle configuration and its sensor data, a vehicle produces around 1 to 120 such packets each minute. The mobile application uses this data in multiple places to show the latest vehicle information, such as running status, odometer readings, seatbelt details, tyre pressure, and much more. In a high-scale, reliable system, it is critical that no event or message is missed and that every message is processed in near real-time.

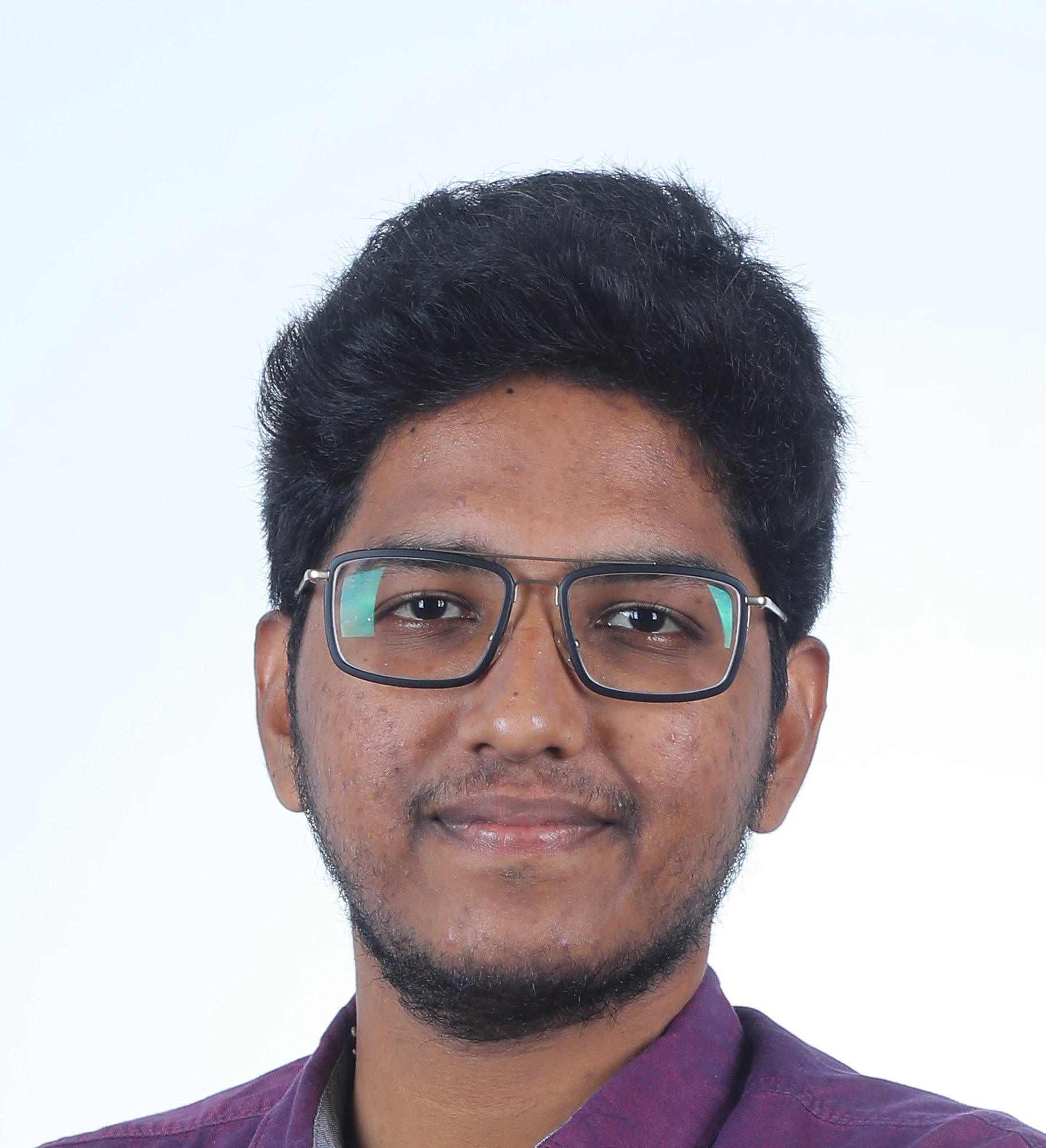

Client Side Polling

Initially, we started with a basic polling strategy: we exposed a REST API that returned the latest data sent by each vehicle, and the mobile application polled this endpoint every second. At that time the platform had very few active vehicles, and this solution worked well for some years.

Challenges with Scaling Client-side Polling Solution

Given below are some of the challenges we faced in scaling the client-side polling solution:

With more active vehicles, we started seeing latency issues on the API because the database could not handle such load.

Battery optimisation on mobile phones also started throttling the application's continuous polling.
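To make the load problem concrete, here is a back-of-the-envelope sketch using the figures mentioned earlier. It assumes one polling client per active vehicle and evenly spaced packets, both of which are simplifications, and the function names are ours for illustration only.

```python
def polling_requests_per_second(active_clients: int, poll_interval_s: float) -> float:
    """Requests per second hitting the API when every client polls on a fixed interval."""
    return active_clients / poll_interval_s


def empty_poll_fraction(packets_per_minute: float, poll_interval_s: float) -> float:
    """Fraction of polls that cannot return new data.

    Assumes packets arrive evenly spaced (a simplification).
    """
    polls_per_minute = 60 / poll_interval_s
    # A poll is only useful if at least one new packet arrived since the last one.
    useful_polls = min(packets_per_minute, polls_per_minute)
    return 1 - useful_polls / polls_per_minute


if __name__ == "__main__":
    # 50k active vehicles, one client each (assumption), polling every second.
    print(polling_requests_per_second(50_000, 1.0))   # 50000.0
    # A vehicle sending 1 packet per minute: almost every poll is wasted.
    print(round(empty_poll_fraction(1, 1.0), 3))      # 0.983
    # A vehicle sending 120 packets per minute: every poll finds new data.
    print(empty_poll_fraction(120, 1.0))              # 0.0
```

For vehicles that report infrequently, nearly all of the polling traffic reaches the database without returning anything new, which is the cost a push model avoids.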

Though the solution was simple and easy to maintain, the platform struggled to scale as more vehicles were onboarded. To fix that, we decided to move away from client-side polling to server-side push. There are two prominent options for server-side push: WebSockets and Server-Sent Events.

WebSockets: Enable full-duplex, real-time communication between a client and server, allowing data to be sent and received simultaneously, which is ideal for applications like chat or gaming.

Server-Sent Events (SSE): Provide one-way communication from the server to the client over HTTP, suitable for real-time updates like news feeds or notifications, with less complexity than WebSockets.
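For readers who have not seen SSE on the wire, the sketch below formats one event as the plain-text frame that a browser's EventSource would parse. The helper name, the event name, and the payload fields are hypothetical; only the `id:`, `event:`, and `data:` field syntax and the blank-line terminator come from the SSE format itself.

```python
import json
from typing import Optional


def format_sse(data: str, event: Optional[str] = None, event_id: Optional[str] = None) -> str:
    """Serialise one Server-Sent Event as text ready to be written to the response."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")
    # A multi-line payload must be sent as one "data:" field per line.
    for chunk in data.split("\n"):
        lines.append(f"data: {chunk}")
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"


if __name__ == "__main__":
    payload = json.dumps({"odometer_km": 12345})  # hypothetical vehicle field
    print(repr(format_sse(payload, event="vehicle-update")))
    # 'event: vehicle-update\ndata: {"odometer_km": 12345}\n\n'
```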

We went ahead with Server-Sent Events since our use case doesn’t demand a bidirectional connection. Though an SSE API looks similar to a stateless REST API, scaling it is not an easy task. Let us now look at the issues with scaling stateful connections such as WebSockets or SSE.

Challenges with Scaling Stateful Connections

Stateless REST APIs can be elastically scaled based on load with a simple load balancer. As long as your database can handle the load, scaling the backend is relatively easy. With stateful APIs, in contrast, the client holds a persistent connection with a backend application, and the backend application keeps streaming new data whenever it is produced. This leads to the following challenges:

With more connections added, a single instance of the application will not be able to handle the load. After a point, even vertical scaling (adding more resources) will not help. So, we need to support horizontal scaling.

With horizontal scaling, more than one replica of the backend application hosts stateful connections, so each message has to be delivered to the specific replica that holds the connection for the intended client.

Broadcasting the message to all the application replicas would incur more network data transfer costs.

Clients maintain long-running connections with the application, and the business logic is coupled with the SSE/WebSocket serving code. Rolling out a business logic change therefore terminates every client connection held by the application, and each client has to reinitiate its connection. Though this is only a minor dip in user experience, there are approaches that avoid it altogether.
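The delivery challenge in the list above can be illustrated by counting how many replica deliveries each strategy needs. This is a toy model, not a measurement: the replica names, channel IDs, and the idea of a central subscription table are assumptions made purely for illustration.

```python
from typing import Dict, List, Set


def broadcast_sends(num_replicas: int, num_messages: int) -> int:
    """Every message is sent to every replica, whether or not it has a listener."""
    return num_replicas * num_messages


def routed_sends(subscriptions: Dict[str, Set[str]], message_channels: List[str]) -> int:
    """Each message is sent only to replicas that hold a connection for its channel.

    `subscriptions` maps a replica name to the channel IDs it has clients for.
    """
    total = 0
    for channel in message_channels:
        total += sum(1 for channels in subscriptions.values() if channel in channels)
    return total


if __name__ == "__main__":
    subscriptions = {
        "replica-a": {"veh-1"},
        "replica-b": {"veh-2"},
        "replica-c": set(),  # no connected clients
    }
    messages = ["veh-1", "veh-2", "veh-3"]  # veh-3 has no listener anywhere
    print(broadcast_sends(len(subscriptions), len(messages)))  # 9
    print(routed_sends(subscriptions, messages))               # 2
```

Broadcasting is simpler because no component needs to know where each connection lives, but its cost grows with the number of replicas rather than with the number of interested clients.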

Server-side Push using Pushpin

To solve all of the above issues, we identified an interesting tool named Pushpin. Here is how the Pushpin authors describe it:

Pushpin is a reverse proxy server written in Rust & C++ that makes it easy to implement WebSocket, HTTP streaming, and HTTP long-polling services. The project is unique among realtime push solutions in that it is designed to address the needs of API creators. Pushpin is transparent to clients and integrates easily into an API stack.

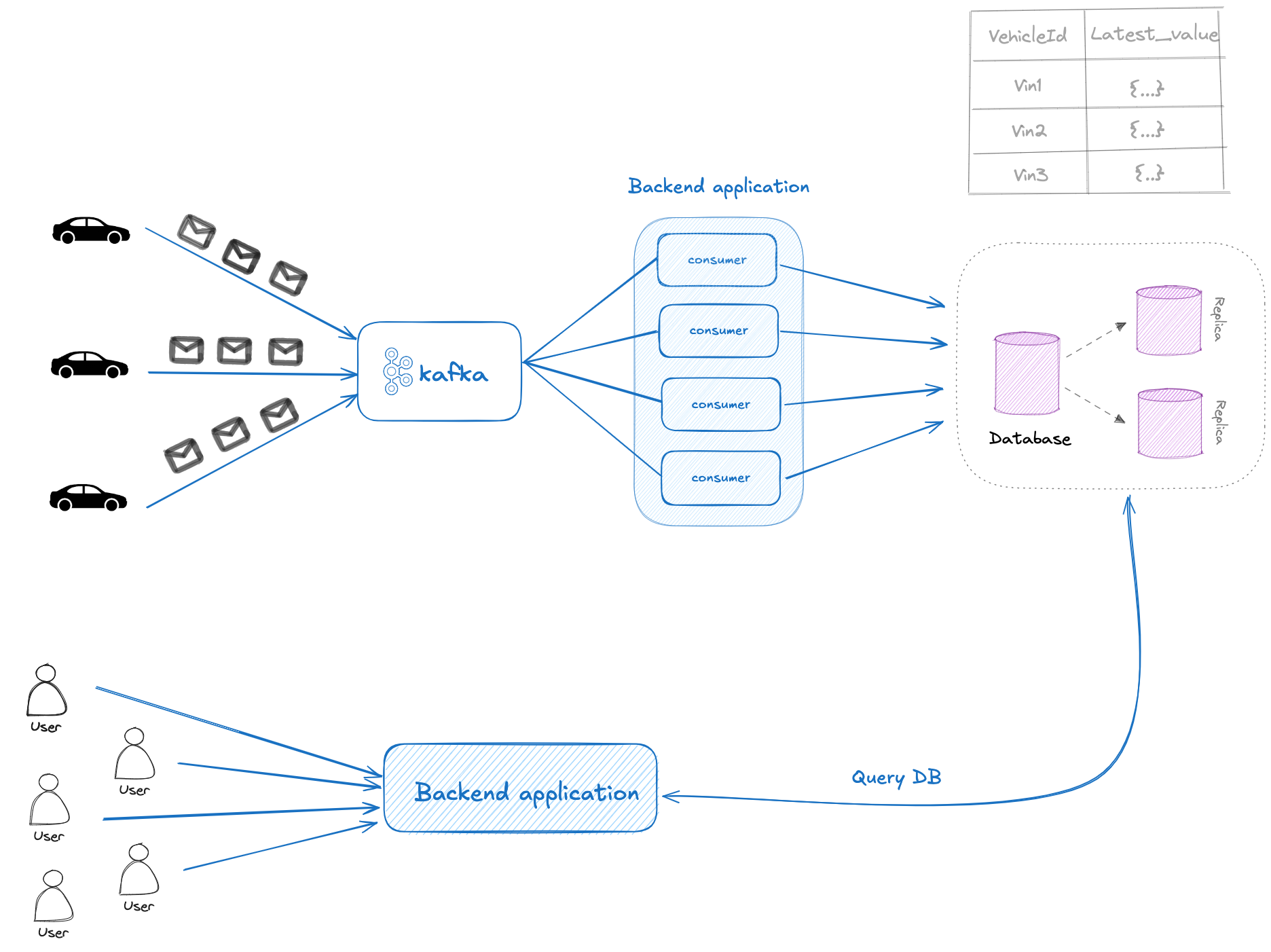

SSE architecture with Pushpin as the Backbone

The vehicle will stream the data to our backend at the configured frequency.

Vehicle data (packets) will be dumped into the message queue for further processing (not in the scope of this blog).

Streaming consumers will read the data from the message queue, enrich the data with some business logic, and broadcast the message to all the Pushpin replicas.

Pushpin will check whether the vehicle data has any active listeners. If it does, Pushpin will forward the packet to them; otherwise, the packet will be dropped.

Data Flow of the New Architecture

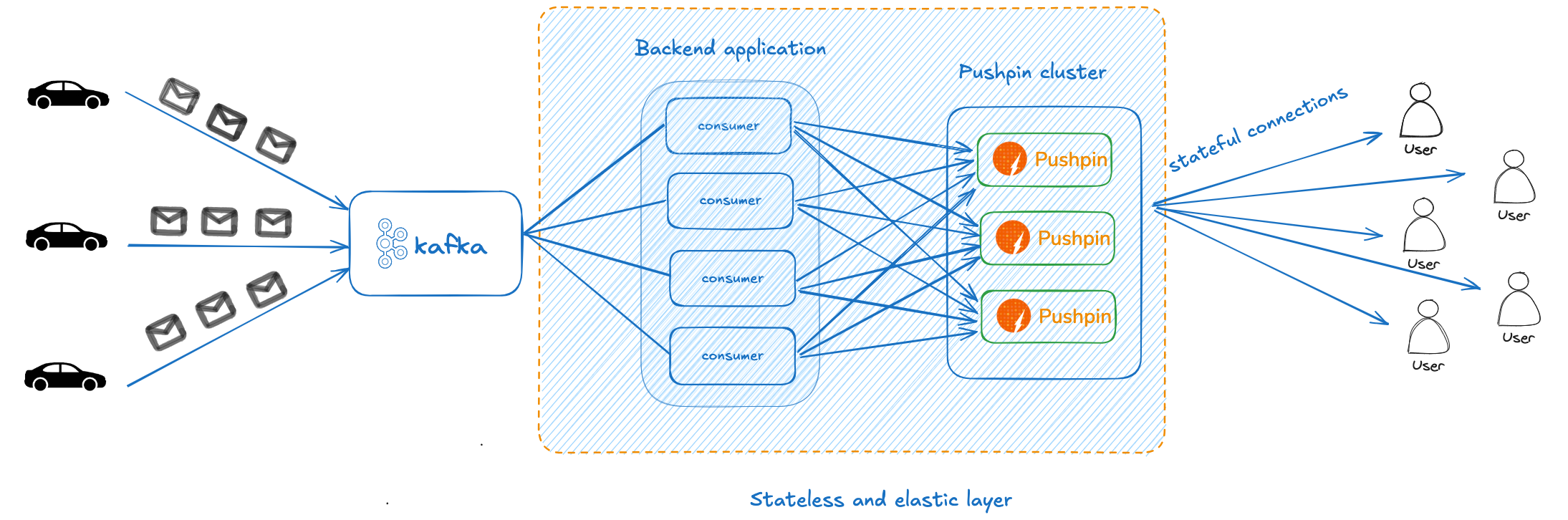

The user will send an HTTP GET request to Pushpin asking for the latest vehicle data.

Pushpin, acting as a reverse proxy, will forward the request to the backend application based on the configured routing.

The backend application, upon receiving the request, will query the database to respond with the latest data (similar to how polling works).

In addition, the backend application will add Grip headers to the response. These tell Pushpin to keep the connection open and treat it as an SSE connection subscribed to a unique channel ID, which in our case is the vehicle’s internal identifier.

Grip-Hold: stream # To keep the connection open and treat it as a Server-Sent Events stream.

Grip-Channel: {Vehicle internal identifier} # Channel ID

Pushpin, on receiving the Grip headers, will keep the connection open and will internally add a mapping from the channel ID to the connection.
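As a rough illustration of the backend's side of this exchange, the sketch below builds the initial response with the two Grip headers described above. It is framework-agnostic and is not our production handler: the function name, the vehicle ID format, and the payload fields are hypothetical, and the `Content-Type` header is our assumption of what an SSE response would normally carry.

```python
import json
from typing import Dict, Tuple


def build_grip_stream_response(vehicle_id: str, latest: dict) -> Tuple[int, Dict[str, str], str]:
    """Return (status, headers, body) for the first response on an SSE request.

    The body carries the latest known data as one SSE event; the Grip headers
    ask Pushpin to hold the connection open and subscribe it to the channel.
    """
    headers = {
        "Content-Type": "text/event-stream",  # assumption: standard SSE content type
        "Grip-Hold": "stream",                # keep the connection open as a stream
        "Grip-Channel": vehicle_id,           # channel ID = vehicle's internal identifier
    }
    body = f"data: {json.dumps(latest)}\n\n"
    return 200, headers, body


if __name__ == "__main__":
    status, headers, body = build_grip_stream_response("veh-123", {"running": True})
    print(status)
    print(headers["Grip-Hold"], headers["Grip-Channel"])
    print(repr(body))
```

Because the backend returns immediately after sending this response, it stays stateless; the open connection lives in Pushpin.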

On receiving a new message in the message broker, the backend application will broadcast the message to all Pushpin servers through the HTTP POST endpoint exposed by Pushpin.
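The broadcast step might look like the sketch below, which builds a publish request body with an `http-stream` format item and POSTs it to each Pushpin server. The replica hostnames and payload fields are hypothetical, and the `/publish/` path on port 5561 is assumed from Pushpin's default configuration; check it against your own deployment.

```python
import json
import urllib.request
from typing import Iterable, List


def build_publish_body(channel: str, payload: dict) -> bytes:
    """Build the JSON body for Pushpin's HTTP publish endpoint.

    The content is framed as one SSE event so that it can be written
    as-is to every held connection subscribed to the channel.
    """
    sse_frame = f"data: {json.dumps(payload)}\n\n"
    item = {"channel": channel, "formats": {"http-stream": {"content": sse_frame}}}
    return json.dumps({"items": [item]}).encode("utf-8")


def broadcast(publish_urls: Iterable[str], body: bytes, timeout_s: float = 2.0) -> List[int]:
    """POST the same body to every Pushpin server and return the HTTP statuses."""
    statuses = []
    for url in publish_urls:
        request = urllib.request.Request(
            url,
            data=body,
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=timeout_s) as response:
            statuses.append(response.status)
    return statuses


if __name__ == "__main__":
    body = build_publish_body("veh-123", {"odometer_km": 12345})
    print(body.decode("utf-8"))
    # Hypothetical replica addresses; uncomment to publish against real servers.
    # broadcast(["http://pushpin-0:5561/publish/", "http://pushpin-1:5561/publish/"], body)
```

A production publisher would also need retries and error handling for unreachable servers, which are left out here.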

Upon receiving the new message, Pushpin will check for any mapping for the channel ID. If present, it will forward the message to the connection or else it will ignore the message.
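Conceptually, the channel lookup behaves like the small in-memory registry below. This is only a mental model written for this post, not Pushpin's actual implementation; the class and method names are made up.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class ChannelRegistry:
    """Toy model of a channel ID to open-connection mapping."""

    def __init__(self) -> None:
        # Each connection is represented by a callable that writes to the client.
        self._connections: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, channel: str, send: Callable[[str], None]) -> None:
        """Record that a held connection is listening on the channel."""
        self._connections[channel].append(send)

    def publish(self, channel: str, content: str) -> int:
        """Forward content to every listener; return how many received it."""
        listeners = self._connections.get(channel, [])
        for send in listeners:
            send(content)
        return len(listeners)


if __name__ == "__main__":
    registry = ChannelRegistry()
    received: List[str] = []
    registry.subscribe("veh-1", received.append)

    print(registry.publish("veh-1", "data: hello\n\n"))  # 1, forwarded to the listener
    print(registry.publish("veh-2", "data: hello\n\n"))  # 0, no listener so it is ignored
    print(received)                                      # ['data: hello\n\n']
```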

The one drawback of this approach is that broadcasting every message to all Pushpin servers results in higher network transfer costs. To solve this, Pushpin suggests publishing over ZeroMQ, where subscription information is forwarded to the producer so that it can publish only to the Pushpin servers that need the message. You can read more about it in the Pushpin docs listed in the references.

General Benefits of this Architecture

While illustrated here with a connected vehicle platform, this architecture supports any IoT solution that demands real-time data delivery at scale. Its benefits include:

Separation of Concerns: Business logic is decoupled from connection management, allowing for independent scaling.

Resource Optimisation: A dedicated proxy such as Pushpin can hold a very large number of stateful connections with modest resources, so the backend application does not have to.

Resiliency: Stateless components simplify scaling and reduce the impact of updates on connected clients.

Conclusion

Transitioning from client-side polling to a server-side push mechanism using Server-Sent Events (SSE) and Pushpin gave us a more robust and scalable solution. Overall, this architecture enhances the platform’s ability to handle high-scale data streaming and deliver real-time updates to users efficiently.

This approach, though implemented here for a Connected Vehicle Platform, can be applied across various IoT systems requiring real-time data streaming.

References:

Pushpin docs: https://pushpin.org/docs/about/

GRIP protocol used by Pushpin: https://pushpin.org/docs/protocols/grip/

Scaling WebSockets to millions (Phoenix project): https://phoenixframework.org/blog/the-road-to-2-million-websocket-connections