Recently I had a challenging problem to solve: I needed to extend a data product that served clients in a single region to clients in another region. Because data laws differ between countries, I had to provision an entirely new set of infrastructure in the new region, with multiple environments, and run the exact same product there.

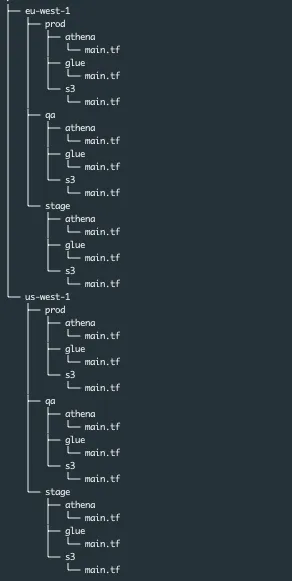

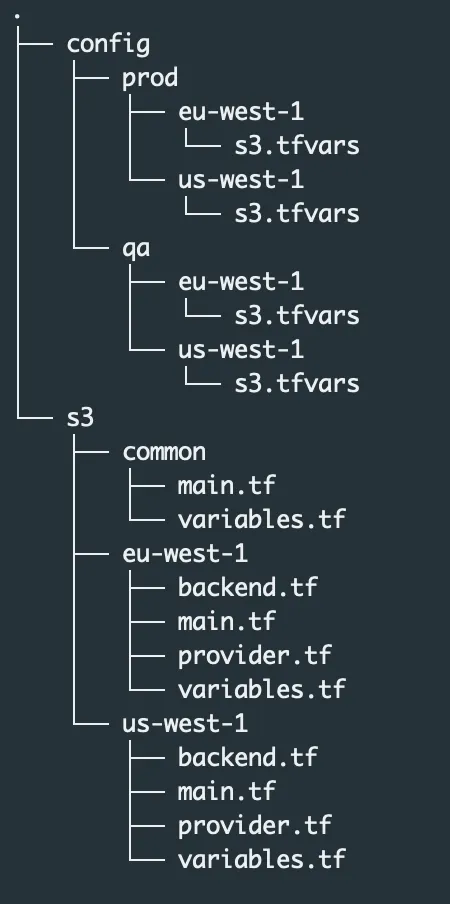

Almost all of the blogs and documentation available online gave only partial solutions: they helped with provisioning infrastructure in either multiple regions or multiple environments, but not both. A naive way to handle both multi-region and multi-environment provisioning is to copy-paste the code for each environment and region. The structure would look something like this:

One thing I was very clear about when I started working on the problem: the final Terraform code should be DRY (Don’t Repeat Yourself). The solution above, however, is dripping WET (Write Everything Twice).

Single Region/Multiple Environment Code Structure

I will quickly walk through how the code structure looked before I extended it to support multiple regions:

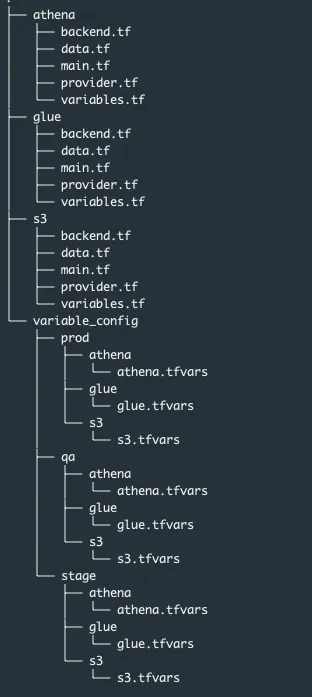

Each AWS service is separated out into its own module, and each module has five files. main.tf holds the infrastructure resources that are created via code. The data sources in data.tf fetch information about infrastructure that was created manually or by other Terraform modules. variables.tf declares the variables needed by the resources in main.tf and the data sources in data.tf. These variables are overridden during plan/apply by passing the corresponding tfvars file on the command line, like this:

terraform apply --var-file "s3.tfvars"

# Overriding the S3 variables during the apply stage

Similarly, provider.tf and backend.tf hold the corresponding configurations. In my case, AWS was the provider and an S3 bucket was my backend.
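The post does not show provider.tf and backend.tf, so here is a minimal sketch of what they might contain for the S3 module. The state bucket name, the state key, and the provider version pin are assumptions made for illustration, not the values from the real project. The 3.x pin is there because the inline acl argument used in this post's bucket resources was deprecated in version 4 of the AWS provider.

```hcl
# s3/provider.tf (sketch)
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 3.0" # assumption: keeps the inline acl argument undeprecated
    }
  }
}

provider "aws" {
  # Reuses the aws_region variable declared in variables.tf
  region = var.aws_region
}

# s3/backend.tf (sketch)
terraform {
  backend "s3" {
    bucket = "example-terraform-state" # hypothetical state bucket
    key    = "s3/terraform.tfstate"    # hypothetical state key
    region = "eu-west-1"               # backend blocks cannot reference variables
  }
}
```

Because a backend block cannot use variables, one possible way to keep a separate state file per environment is to leave key out of the block and supply it at init time with terraform init -backend-config="key=...".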

The above code structure supported creating multiple environments in a single region while doing a terraform plan/apply.

Single Region/Multi Environment Example

Let’s assume we have already created three S3 buckets in the eu-west-1 AWS region. The code looks like this:

s3/main.tf

resource "aws_s3_bucket" "test-bucket-1" {

bucket = "test-bucket-1-${var.environment}-${var.aws_region}"

acl = "private"

}

resource "aws_s3_bucket" "test-bucket-2" {

bucket = "test-bucket-2-${var.environment}-${var.aws_region}"

acl = "private"

}

resource "aws_s3_bucket" "test-bucket-3" {

bucket = "test-bucket-3-${var.environment}-${var.aws_region}"

acl = "private"

}

In the above code, the aws_s3_bucket resources create three S3 buckets in the selected region via Terraform.

s3/variables.tf

variable "environment" {

type = string

description = "This holds environment name. Example: qa, stage, prod"

}

variable "aws_region" {

type = string

description = "This is the region we use in AWS"

default = "eu-west-1"

}

The value for environment is passed from the s3.tfvars file. aws_region falls back to its default, eu-west-1, unless the tfvars file overrides it.

config/qa/s3/s3.tfvars

environment="qa"

config/prod/s3/s3.tfvars

environment="prod"

Similarly, we will also have provider.tf and backend.tf alongside the main and variables files.

With the above code, three S3 buckets are created per environment in the eu-west-1 region. In the QA and prod environments, the following buckets are created:

QA:

test-bucket-1-qa-eu-west-1

test-bucket-2-qa-eu-west-1

test-bucket-3-qa-eu-west-1

Prod:

test-bucket-1-prod-eu-west-1

test-bucket-2-prod-eu-west-1

test-bucket-3-prod-eu-west-1

The code structure will look like this:

When deploying to the QA environment, the variables from the matching config folder (for example config/qa/vpc) are used, and likewise config/prod/vpc for prod.

Terraform Modules

A module is a container for multiple resources that are used together. Modules can be used to create lightweight abstractions, so that you can describe your infrastructure in terms of its architecture, rather than directly in terms of physical objects — Terraform Documentation

We will use Terraform modules to create an abstraction and reduce code duplication: all common resources move into a common Terraform module, and that module is imported wherever it is needed.

Extending the above example to support Multi-Region/Multi Environment using Terraform Modules:

Now let’s assume we want to create test-bucket-1 and test-bucket-3 in the us-west-1 region. Instead of duplicating the code, let’s create an abstraction that holds all the common code. In Terraform, any folder containing configuration files is treated as a module, so let’s create a module called common under the S3 folder and move all the common code into it. The main.tf file in common will have the following code.

s3/common/main.tf

resource "aws_s3_bucket" "test-bucket-1" {

bucket = "test-bucket-1-${var.environment}-${var.aws_region}"

acl = "private"

}

resource "aws_s3_bucket" "test-bucket-3" {

bucket = "test-bucket-3-${var.environment}-${var.aws_region}"

acl = "private"

}

Since our requirement was just to create test-bucket-1 and test-bucket-3 in the us-west-1 region, we have moved that shared code into the common module.

Similarly, add a variables.tf file under the common module.
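The variables file for the common module is not shown in the post. A minimal sketch could look like the following. It assumes the common module declares the same two variables that its bucket names reference, and it leaves out the default for aws_region so that every calling region module has to pass its own region explicitly. That omission is a design choice of this sketch, not something the original states.

```hcl
# s3/common/variables.tf (sketch)
variable "environment" {
  type        = string
  description = "Environment name, for example qa, stage, or prod"
}

variable "aws_region" {
  type        = string
  description = "AWS region, passed in by the calling region module"
  # No default here (assumption): the caller must always supply the region
}
```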

Now let’s create a us-west-1 module under the S3 folder. The main file in the newly created module imports the code from the common module.

s3/us-west-1/main.tf

module "common"{

source = "../common"

environment = var.environment

aws_region = var.aws_region

}

s3/us-west-1/variables.tf

variable "aws_region" {

type = string

description = "This is the region we use in AWS"

default = "us-west-1"

}

variable "environment" {

type = string

description = "This holds environment name. Example: qa, stage, prod. Environment value will be passed from tfvars file"

}

The code for us-west-1 is now ready, and we can run terraform apply from the s3/us-west-1 directory.

terraform apply --var-file "config/$REGION/$ENV/s3.tfvars"

# Pass the REGION and ENV environment variables before running apply

Now we need to place the code for test-bucket-2 somewhere it will be used only when running Terraform for eu-west-1. So let’s create a new module called eu-west-1, similar to us-west-1. In its main file, we import the code from the common module and also create the test-bucket-2 resource.

s3/eu-west-1/main.tf

module "common"{

source = "../common"

environment = var.environment

aws_region = var.aws_region

}

resource "aws_s3_bucket" "test-bucket-2" {

bucket = "test-bucket-2-${var.environment}-${var.aws_region}"

acl = "private"

}

Now when we run terraform apply, test-bucket-2 will be created in eu-west-1 along with test-bucket-1 and test-bucket-3.

Add a variables file similar to s3/us-west-1/variables.tf in this module. Here the default value of the aws_region variable will be eu-west-1.
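For completeness, the eu-west-1 variables file described here could be sketched as follows. It simply mirrors s3/us-west-1/variables.tf from earlier in the post with the region default swapped.

```hcl
# s3/eu-west-1/variables.tf (sketch mirroring the us-west-1 version)
variable "aws_region" {
  type        = string
  description = "This is the region we use in AWS"
  default     = "eu-west-1"
}

variable "environment" {
  type        = string
  description = "Environment name, passed from the tfvars file"
}
```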

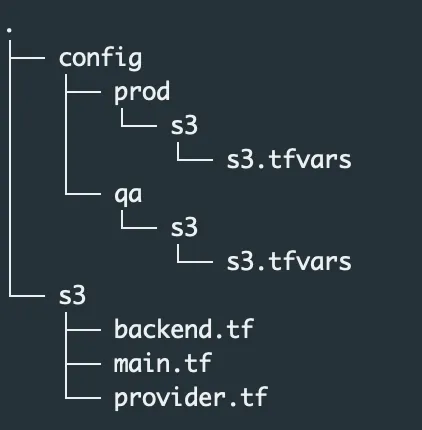

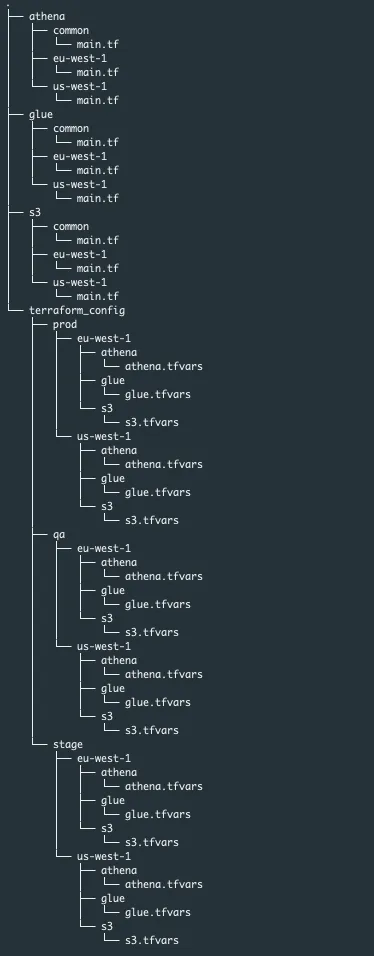

After abstracting the code, the overall code structure will look like this

In the future, if a new requirement calls for resources that are specific to one region, we can add them to the corresponding region module. If the requirement is common to all regions, we can add the resources to the common module.
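As an illustration of the region-specific case, suppose a later requirement asks for one more bucket that should exist only in us-west-1. The bucket name test-bucket-4 is hypothetical and used only for this sketch. The resource would be added to the us-west-1 module, next to the existing call to the common module, in the same way test-bucket-2 was added for eu-west-1.

```hcl
# s3/us-west-1/main.tf (sketch: existing module call plus a hypothetical addition)
module "common" {
  source      = "../common"
  environment = var.environment
  aws_region  = var.aws_region
}

# Hypothetical bucket that exists only in us-west-1
resource "aws_s3_bucket" "test-bucket-4" {
  bucket = "test-bucket-4-${var.environment}-${var.aws_region}"
  acl    = "private"
}
```

Had the new bucket been needed in every region, the same resource block would go into s3/common/main.tf instead, and both region modules would pick it up on the next apply.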

Now let’s have a look at the final structure of the code after migrating the Naive approach code to support both multi-region and multi-environment

We have now created a DRY code structure that supports both multiple regions and multiple environments, and the code is readable, maintainable, and reusable.