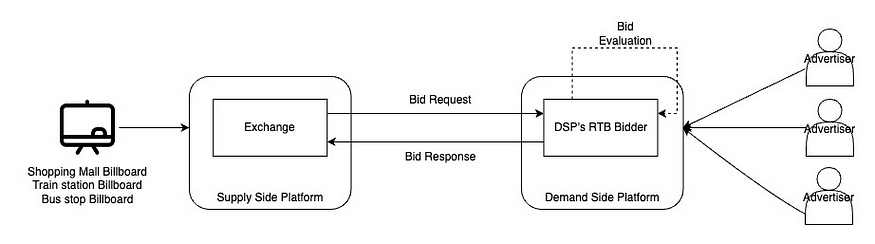

We work with Talon Outdoor in the out-of-home (OOH) advertising industry, which uses billboards, transit stations, shopping centres and similar locations to deliver brand messages to a wide audience in public places. As part of building applications that allow Talon to buy out-of-home media, we built a demand-side platform (DSP) that helps them programmatically purchase digital out-of-home (DOOH) inventory, which consists of digital screens placed in public places. A DSP provides a centralized interface for targeting specific audiences and optimizing ad placements based on data-driven insights. The DSP buys inventory in real time using a mechanism known as real-time bidding (RTB).

A key component of the DSP is the RTB bidder, which participates in the real-time auction and bids on ad impressions on behalf of advertisers. The bidder matches each bid request raised by the supply-side platform (SSP) for an ad slot on a DOOH screen to one of several advertisers’ campaigns and bids at the right price so that the campaigns are delivered successfully. It has to do this while handling up to 15k requests per second and responding within 200–300 milliseconds.

The request-response between the SSP and the DSP typically follows these steps:

- The SSP sends a bid request to the DSP for each available ad slot on a digital screen.

- The bid request includes information about the available digital screen/billboard, such as a unique identifier, geolocation, available impressions and ad creative specifications.

- The bidder receives the bid request and evaluates the information in it to determine whether it wants to respond. The bidder considers factors such as advertiser preferences, budget constraints, target audience and creative specifications. If several advertisers are viable, the bidder also has to choose one of them.

- Once the bidder has determined which advertiser to respond with, it generates a bid response. The bid response includes the bid price, the creative details and the callback URLs that the SSP uses to notify the bidder of the win, loss or billing of the bid.

- The bidder sends the generated response back to the SSP.
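The steps above can be sketched in a few lines of Java. This is a simplified illustration, not our production code and not the actual wire format exchanged with the SSP: the class, record and field names are hypothetical, the callback URLs are placeholders, and the rule for choosing between several viable campaigns (the one with the most remaining budget) is an assumption made only for the example.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical, simplified model of the bid request/response flow. */
final class BidFlowSketch {

    // Mirrors the information a bid request carries: screen, location, impressions, creative spec.
    record BidRequest(String screenId, double lat, double lon, int impressions,
                      int creativeWidth, int creativeHeight) {}

    // A campaign as the bidder might see it; every field is illustrative.
    record Campaign(String advertiser, List<String> targetScreenIds, double remainingBudget,
                    double bidPrice, int creativeWidth, int creativeHeight, String creativeUrl) {}

    // Bid price, creative details and the callback URLs used for win/loss/billing notices.
    record BidResponse(String advertiser, double price, String creativeUrl,
                       String winUrl, String lossUrl, String billingUrl) {}

    /** A campaign is viable if it targets the screen, can afford the bid and has a fitting creative. */
    static boolean isViable(Campaign c, BidRequest r) {
        return c.targetScreenIds().contains(r.screenId())
                && c.remainingBudget() >= c.bidPrice()
                && c.creativeWidth() == r.creativeWidth()
                && c.creativeHeight() == r.creativeHeight();
    }

    /** Returns a bid for one viable campaign, or empty when the bidder should not respond. */
    static Optional<BidResponse> evaluate(BidRequest request, List<Campaign> campaigns) {
        return campaigns.stream()
                .filter(c -> isViable(c, request))
                // Assumed selection rule: prefer the campaign with the most budget left.
                .max(Comparator.comparingDouble(Campaign::remainingBudget))
                .map(c -> new BidResponse(c.advertiser(), c.bidPrice(), c.creativeUrl(),
                        "https://dsp.example/win",       // placeholder callback URLs
                        "https://dsp.example/loss",
                        "https://dsp.example/billing"));
    }
}
```

Returning an empty `Optional` models the case where the bidder decides not to respond to a request at all.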

Engineering Challenge

The entire process described above happens in milliseconds, allowing for real-time decision-making and ad serving. The RTB bidder plays a critical role in this process by evaluating bid requests, generating bid responses and competing in the auction to secure valuable ad impressions on behalf of advertisers.

The bidder must respond to a large number of bid requests (ranging from a few hundred to 15,000 per second) within 200–300 milliseconds per request. Typically, traffic is high at the beginning of each hour and tapers off as the hour progresses. This is the pattern we have observed for DOOH bid requests; online advertising follows a different pattern.

The RTB bidder must be highly scalable and efficient to handle the high volume of requests. It must also make quick decisions based on the information in the bid request and compete in the auction with other bidders to secure the most valuable ad impressions.

Technology choices

We chose Java as the programming language for this project. The rest of the application was written in it, which made it the best fit, and our team's proficiency in the language gave us a significant head start.

The next step was to choose a framework. We had previously used Akka HTTP for Java, but we found it verbose and difficult to work with. We decided to evaluate Spring Reactive and Vert.x. To make an informed decision, we ran benchmark tests using a lightweight bidder implementation. On a developer machine, the Vert.x-based implementation handled 12k req/s and the Spring Reactive one handled 9k req/s. Ultimately, we selected Vert.x because it offered better performance, a lighter footprint, faster boot times and a more straightforward configuration process than Spring Boot, which relies on more intricate configuration mechanisms.

Solution

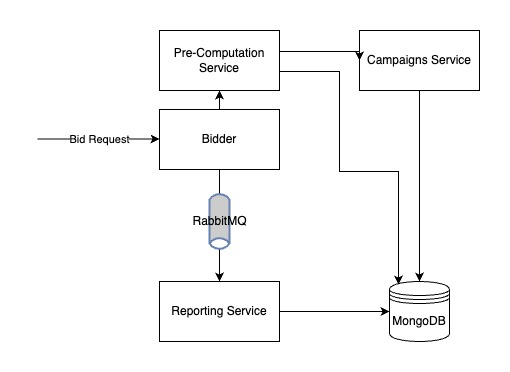

Since the response to each bid request has to be returned within milliseconds, we could not afford to call an external service or database to fetch data while handling a request. Therefore, we wrote a separate microservice that pre-computes all the necessary data so that the bidder does not have to compute it at request time. At a high level, the pre-computation involved:

- Determining which advertisers’ campaigns are currently live.

- Determining the budget for each campaign.

- Determining which digital screen/billboard is appropriate for each campaign based on campaign parameters like location, audience, etc.

- Determining the creative to be used for each advertiser and each screen.
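As a rough illustration of this pre-computation, the sketch below builds a per-screen index of eligible campaigns. It is an assumption-laden example rather than the real service: the `Campaign` and `Candidate` records are hypothetical, and it takes for granted that the location and audience matching has already produced a list of suitable screen IDs for each campaign.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of the pre-computation step; all names are illustrative. */
final class PrecomputeSketch {

    // screenIds is assumed to be the already-resolved result of location/audience targeting.
    record Campaign(String id, Instant start, Instant end, double budget,
                    List<String> screenIds, Map<String, String> creativeByScreen) {}

    // What the bidder needs per screen: which campaign, how much budget, which creative.
    record Candidate(String campaignId, double budget, String creativeId) {}

    /** Builds screenId -> candidates so that request-time matching is a single map lookup. */
    static Map<String, List<Candidate>> buildIndex(List<Campaign> campaigns, Instant now) {
        Map<String, List<Candidate>> index = new HashMap<>();
        for (Campaign c : campaigns) {
            // Live means: started, not yet ended, and with budget left.
            boolean live = !now.isBefore(c.start()) && now.isBefore(c.end());
            if (!live || c.budget() <= 0) {
                continue;
            }
            for (String screenId : c.screenIds()) {
                String creative = c.creativeByScreen().get(screenId);
                if (creative == null) {
                    continue; // no creative fits this screen, so the campaign cannot bid on it
                }
                index.computeIfAbsent(screenId, k -> new ArrayList<>())
                        .add(new Candidate(c.id(), c.budget(), creative));
            }
        }
        return index;
    }
}
```

Keying the result by screen ID is a design choice for the example: every bid request identifies a screen, so the request path never has to scan the full campaign list.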

The bidder fetched the pre-computed data at fixed intervals and stored it in memory. Keeping it in the JVM heap allowed the bidder to assess each bid request rapidly against the pre-computed information and respond only if the request met a specific advertiser’s criteria, the advertiser had budget available, and one of the advertiser’s creatives matched the screen.
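One common way to hold such periodically refreshed data is an immutable snapshot behind an atomic reference. The sketch below shows that pattern using only the JDK; it is a minimal illustration under our own assumptions (the `SnapshotCache` class and the `loader` supplier are hypothetical), not a description of the bidder's actual code.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

/** Hypothetical holder for pre-computed data that is reloaded at a fixed interval. */
final class SnapshotCache<T> {
    private final AtomicReference<T> current;
    private final Supplier<T> loader; // stands in for the call that fetches pre-computed data

    SnapshotCache(Supplier<T> loader) {
        this.loader = loader;
        this.current = new AtomicReference<>(loader.get()); // load once before serving traffic
    }

    /** Called on the request path: one volatile read, no locking and no I/O. */
    T get() {
        return current.get();
    }

    /** Reloads the data and swaps the reference, so readers never see a half-built snapshot. */
    void refresh() {
        try {
            current.set(loader.get());
        } catch (RuntimeException e) {
            // Keep serving the previous snapshot if a reload fails.
        }
    }

    /** Schedules refresh() on a daemon thread; the caller owns the returned scheduler. */
    ScheduledExecutorService scheduleRefresh(long period, TimeUnit unit) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "snapshot-refresh");
            t.setDaemon(true);
            return t;
        });
        scheduler.scheduleAtFixedRate(this::refresh, period, period, unit);
        return scheduler;
    }
}
```

A bidder could, for example, create a cache of the per-screen data and call `scheduleRefresh(30, TimeUnit.SECONDS)`; the 30-second interval is purely illustrative.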

Once the response was generated, we needed to store it along with the bid request and also send it back to the SSP. To keep the latency of storing the bid response off the request path, we used a message broker, RabbitMQ. The bidder puts a message containing the request and response in a queue. A different service reads this message and stores it in the database. Vert.x lets blocking operations, such as publishing a message to a broker, run on worker threads, which allowed us to turn around bid requests quickly while the data was stored asynchronously.
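The hand-off idea can be illustrated without Vert.x or RabbitMQ. In the sketch below, a bounded in-memory queue and a single background thread are stand-ins for the worker thread and the broker client, and the `publisher` callback is a hypothetical placeholder for the code that would publish to RabbitMQ. It shows the pattern only, not our implementation.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.function.Consumer;

/** Hypothetical sketch: the request thread enqueues, a background thread does the blocking publish. */
final class AsyncBidLog {

    record BidEvent(String requestJson, String responseJson) {}

    private final BlockingQueue<BidEvent> buffer;
    private final Thread worker;

    AsyncBidLog(int capacity, Consumer<BidEvent> publisher) {
        this.buffer = new ArrayBlockingQueue<>(capacity);
        this.worker = new Thread(() -> {
            try {
                while (true) {
                    BidEvent event = buffer.take(); // waits until an event is available
                    try {
                        publisher.accept(event);    // blocking work happens off the request thread
                    } catch (RuntimeException e) {
                        // A real service would log or retry; the sketch simply moves on.
                    }
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // exit quietly when interrupted
            }
        }, "bid-log-publisher");
        this.worker.setDaemon(true);
        this.worker.start();
    }

    /** Never blocks the caller; returns false if the buffer is full and the event was dropped. */
    boolean enqueue(BidEvent event) {
        return buffer.offer(event);
    }
}
```

Using `offer` rather than `put` is a deliberate choice in this example: when the buffer is full, the request thread drops the event instead of waiting, so a slow broker cannot delay the bid response.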

Infrastructure

To maintain consistency with the current infrastructure, we continued utilizing the existing setup, which includes a Kubernetes cluster on AWS EC2 instances. Furthermore, we made use of the already-existing components, including RabbitMQ as the message broker and MongoDB as the database. The services responsible for pre-computing data and storing bid data in the database were developed using Java and Spring Boot.

The one change we made in our cluster just for the bidder was to expose it through a Kubernetes Service of type LoadBalancer instead of ClusterIP. Along with this, we created a separate AWS Elastic Load Balancer (ELB) for the bidders to avoid internal traffic routing within the cluster. This allowed us to connect the ELB directly to the bidder pods, which reduced the latency by a few milliseconds per request.

Wrap up

By combining technology and networking optimizations with process optimizations, such as pre-computing all data ahead of time and caching it in memory, the bidder was able to handle a significant load. With a single pod and a resource allocation of 0.5–1 CPU and 500–750 MB of memory, the bidder was able to respond to about 2,000 requests per second with a response time of about 60 ms.

In the next post, I will document the challenges we faced when we integrated with a new SSP with significantly higher bid traffic of 13,000 requests per second.