Failure handling changes drastically in event-driven systems. Unlike API-based systems, where the caller gets immediate feedback, producers fire off a message and assume it will be processed. This is not just a technical concern; it’s also about trust and reliability.

Working on an e-commerce application taught me the importance of failure handling in event-driven systems. It became most apparent during peak sales campaigns, when the volume of incoming events really tested the system and I started to see why we needed to be so prepared.

Sales campaigns happened twice a year, each spanning a couple of days. During a campaign we would typically hit 1M orders, a volume that would otherwise take over a month of normal traffic. The period was also very important from a business perspective: we needed things to go smoothly to maximise profits.

It was during this intense period that I truly understood the essence of failure handling in event-driven architectures. We had basic error handling in place, but the flood of events during the sale tested everything we thought we knew and exposed weaknesses we hadn’t even considered.

The Shift: From Instant Feedback to Asynchronous Uncertainty

When an HTTP API call fails, we know right away. Errors are immediate and easy to see, and retries are often handled by the frontend app or by the user. In event-driven systems, it’s a “fire and forget” situation: once a message is sent, it’s the consumer’s responsibility to handle it successfully. This asynchronous nature makes observability incredibly important. Instead of following a clear API call chain, debugging often means digging through past events stuck in a Dead Letter Queue (DLQ). It’s a different beast, one that is easily underestimated or not considered at all.

Classifying Failures:

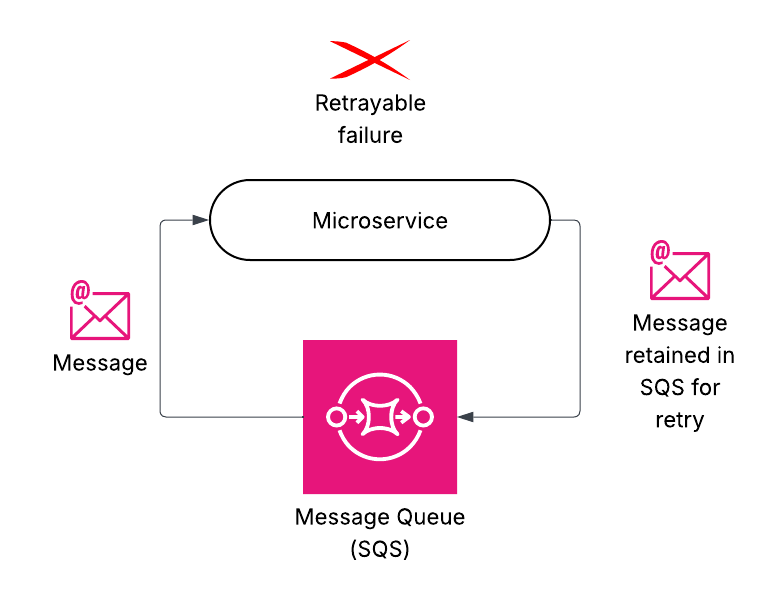

Our first step was to truly understand what could go wrong. Not all failures are the same. Transient failures usually succeed when retried. Fatal failures generally do not pass on retry, so they need alternate strategies such as alerting or moving the message to a dead-letter queue, followed by a manual check.
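Here is a minimal sketch of how such a split could look in Python. The exception names (`ValidationError`, `RateLimitedError`) and the choice of which errors count as transient are hypothetical, chosen for illustration rather than taken from the system described here.

```python
from enum import Enum


class FailureKind(Enum):
    TRANSIENT = "transient"  # may succeed on retry
    FATAL = "fatal"          # retrying will not help


class ValidationError(Exception):
    """Hypothetical: the message breaks a business rule (e.g. invalid product ID)."""


class RateLimitedError(Exception):
    """Hypothetical: a downstream service throttled the call."""


# Exception types treated as transient in this sketch (an assumption, not a rule).
TRANSIENT_ERRORS = (TimeoutError, ConnectionError, RateLimitedError)


def classify(error: Exception) -> FailureKind:
    """Return TRANSIENT for errors that may pass on retry, FATAL otherwise."""
    if isinstance(error, TRANSIENT_ERRORS):
        return FailureKind.TRANSIENT
    # Unknown errors default to FATAL so they reach a human instead of looping.
    return FailureKind.FATAL
```

Defaulting unknown errors to fatal is a design choice: it trades a few unnecessary manual checks for never retrying a poison message forever. A system with flakier dependencies might reasonably default the other way.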

Transient failures: Our Experience

Initially, our message flow seemed fine. We had some basic retry logic in place and also configured retries in SQS. But we quickly learned that “fine” was relative. When the traffic peaked, we were swamped with a tidal wave of “transient” issues—service slowdowns, rate limits, random glitches in downstream services. Each new problem uncovered a new edge case, forcing us to constantly patch our code.

Having multiple downstream systems added even more complexity, since each one had its own edge cases to handle. Also, because ours was a backend-only service, our API calls were frequently throttled so that the end-user experience remained unaffected.

What saved us was building our own replay mechanism (more on that in a bit). We also created a central spot to mark messages for retries, so fixing things became a matter of minutes. And thanks to AWS SQS’s built-in redelivery of unprocessed messages, combined with exponential backoff between attempts, we managed to keep things afloat.
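For readers who want to see what exponential backoff means in numbers, here is a small sketch. It assumes the consumer reads the message’s `ApproximateReceiveCount` attribute and uses the computed delay as the new visibility timeout (for example via the SQS `ChangeMessageVisibility` action). The function name, `base`, and `cap` values are illustrative assumptions, not the settings we ran with.

```python
import random

SQS_MAX_VISIBILITY_SECONDS = 43_200  # SQS caps the visibility timeout at 12 hours


def backoff_seconds(receive_count, base=30, cap=900, rng=None):
    """Delay before the next attempt, doubling per receive and randomised (jitter).

    receive_count is 1 for the first delivery, 2 for the second, and so on.
    """
    if receive_count < 1:
        raise ValueError("receive_count starts at 1")
    rng = rng or random.Random()
    # Double the delay on every attempt, but never exceed the cap or the SQS limit.
    ceiling = min(cap, SQS_MAX_VISIBILITY_SECONDS, base * 2 ** (receive_count - 1))
    # Jitter: pick a value in the upper half of the window so retries
    # from many consumers do not all land at the same instant.
    low = max(1, ceiling // 2)
    return rng.randint(low, ceiling)
```

With the defaults, the first retry waits between 15 and 30 seconds, the third between 60 and 120, and later attempts settle between 450 and 900 seconds.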

As we learned more about how downstream systems behaved, the issues went down over time. The key takeaway? Anticipate problems when the pressure is on and build tools to recover gracefully.

In some scenarios, messages kept failing even after several retry attempts, typically when a downstream system was down for a long time. To prevent message loss in such cases, we routed those messages to the DLQ.

Fatal failures: When Retrying Doesn’t Help

Then there were the fatal failures. No amount of retrying would fix these messages. We faced business logic violations, e.g. missing data or an invalid product ID. We routed these messages straight to a DLQ for investigation, without any retries. This way, the operations team can respond to meaningful issues quickly.
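In code, the “no retries for fatal failures” rule could look roughly like this. It is a sketch only: `FatalError`, `handle`, and the injected `process` and `send_to_dlq` callables are hypothetical names standing in for real business logic and a real queue client.

```python
class FatalError(Exception):
    """Hypothetical marker for failures that retrying cannot fix."""


def handle(message, process, send_to_dlq):
    """Process one message.

    Returns "ok" on success and "dead-lettered" on a fatal failure.
    Any other exception is re-raised to the caller.
    """
    try:
        process(message)
        return "ok"
    except FatalError as error:
        # Skip retries entirely: park the message for investigation.
        send_to_dlq(message, reason=str(error))
        return "dead-lettered"
    # Other exceptions propagate. The message is then not acknowledged,
    # so the queue can redeliver it according to its retry settings.
```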

Sample Terraform Config for AWS SQS:

SQS redrive policy helped us a lot with transient failures.

```hcl
provider "aws" {
  region = "us-east-1"
}

# 1. Create the Dead Letter Queue
resource "aws_sqs_queue" "dlq" {
  name = "my-app-dlq"
}

# 2. Create the Main Queue and attach DLQ via redrive_policy
resource "aws_sqs_queue" "main_queue" {
  name = "my-app-main-queue"

  redrive_policy = jsonencode({
    deadLetterTargetArn = aws_sqs_queue.dlq.arn
    maxReceiveCount     = 5 # After 5 failed receives, message goes to DLQ
  })

  visibility_timeout_seconds = 30
}
```

How to Handle Messages from the DLQ?

Finding the underlying cause was crucial when handling DLQ messages. We had to figure out whether the problem was in our service or in a dependency. The ultimate objective was to fix the underlying problem so that the message could be processed correctly. Only then was the message replayed into the system.

That’s where our replay framework came in. We built an internal API that let us selectively replay messages based on failure reason or even specific message ID, like so:

/replay?dlq=dlq-name&originalQueue=original-queue-name&failureReason=ValidationError

/replay?dlq=dlq-name&originalQueue=original-queue-name&messageId=message-id-1
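The selection logic behind such an endpoint can be sketched in a few lines. This is an in-memory illustration, not our actual framework: plain Python lists stand in for the DLQ and the original queue, and `DeadLetter` and `replay` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DeadLetter:
    message_id: str
    failure_reason: str
    body: dict


def replay(dlq, original_queue, failure_reason: Optional[str] = None,
           message_id: Optional[str] = None) -> int:
    """Move matching dead letters back to the original queue; return how many moved."""
    if failure_reason is None and message_id is None:
        # Guard against replaying the whole DLQ by accident.
        raise ValueError("refusing to replay without a filter")

    def matches(m: DeadLetter) -> bool:
        if message_id is not None and m.message_id != message_id:
            return False
        if failure_reason is not None and m.failure_reason != failure_reason:
            return False
        return True

    selected = [m for m in dlq if matches(m)]
    original_queue.extend(m.body for m in selected)
    # Keep only the messages that were not replayed.
    dlq[:] = [m for m in dlq if not matches(m)]
    return len(selected)
```

Requiring at least one filter is a deliberate choice in this sketch: a replay with no filter would push every dead letter back at once, which is rarely what you want in the middle of an incident.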

Additional Tip: Establishing traceability and observability around failures is critical. Capturing the failure reason directly in the DLQ message payload made our analysis significantly easier: it reduced our reliance on sifting through logs and sped up debugging. The failure reason could also be kept in persistent storage.
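One way to carry the failure reason with the message is to wrap the original payload in a small envelope before it is sent to the DLQ. The sketch below assumes the consumer publishes to the DLQ itself; as far as I know, a redrive policy moves the message as-is and leaves the body untouched. The field names and the `to_dead_letter` helper are hypothetical.

```python
import json
from datetime import datetime, timezone


def to_dead_letter(original_body: str, error: Exception, source_queue: str) -> str:
    """Wrap the original payload with failure metadata for the DLQ."""
    envelope = {
        "originalBody": original_body,             # untouched, so it can be replayed
        "failureReason": type(error).__name__,     # short, filterable category
        "failureDetail": str(error),               # human-readable detail
        "sourceQueue": source_queue,               # where to replay it to
        "failedAt": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(envelope)
```

Keeping `failureReason` as a short category (rather than a full stack trace) is what makes it practical to filter replays by reason later.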

Time-critical Messages

We were extra cautious with messages that had direct customer impact. Replaying stale or failed messages could confuse and upset customers. Imagine getting two delivery notifications for the same package a day apart! We discussed this with stakeholders up front, because the impact would be higher during the sales season.

It’s crucial to assess the business impact before replaying customer-facing messages from a DLQ, because customer trust may suffer. At higher message volumes, where the impact is greater, it is best to discuss such edge scenarios with stakeholders beforehand.
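A simple guard can make that decision explicit in a replay tool. The sketch below is an assumption about how such a check might look: the `customer_facing` flag, the one-hour `max_age`, and the function name are all illustrative, and the real threshold is a business decision to agree with stakeholders.

```python
from datetime import datetime, timedelta, timezone


def safe_to_replay(created_at, customer_facing, now=None, max_age=timedelta(hours=1)):
    """Return False for customer-facing messages that are too old to replay blindly."""
    now = now or datetime.now(timezone.utc)
    if not customer_facing:
        # Internal messages are replayed regardless of age in this sketch.
        return True
    return now - created_at <= max_age
```

Messages that fail the check are not dropped; they are simply left in the DLQ until someone decides what the customer should (or should not) see.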

Help Thy Neighbour

If your service emits outgoing messages to downstream systems as part of event processing, it’s a good practice to persist those outbound events before dispatching them. This creates a reliable audit trail of processed events, which was really helpful while debugging issues.

Moreover, the earlier-mentioned replay mechanism can be extended to support replaying specific outbound events—particularly useful when a downstream system requests a resend due to a failure on their side. Having this capability can come in handy at times and minimise recovery overhead.
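To make the idea concrete, here is a rough sketch of persisting outbound events before dispatching them, with a resend helper on top. It uses an in-memory SQLite table purely for illustration; the table layout and the `open_outbox`, `emit`, and `resend` names are hypothetical, and `dispatch` stands in for whatever client actually publishes the event.

```python
import json
import sqlite3


def open_outbox(path=":memory:"):
    """Open (and create if needed) the table that records outbound events."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS outbox ("
        " event_id TEXT PRIMARY KEY,"
        " destination TEXT NOT NULL,"
        " payload TEXT NOT NULL,"
        " dispatched INTEGER NOT NULL DEFAULT 0)"
    )
    return conn


def emit(conn, event_id, destination, payload, dispatch):
    """Persist the event first, then dispatch it and mark it as sent."""
    with conn:  # commits on success
        conn.execute(
            "INSERT INTO outbox (event_id, destination, payload) VALUES (?, ?, ?)",
            (event_id, destination, json.dumps(payload)),
        )
    dispatch(destination, payload)
    with conn:
        conn.execute("UPDATE outbox SET dispatched = 1 WHERE event_id = ?", (event_id,))


def resend(conn, event_id, dispatch):
    """Re-dispatch a stored event, e.g. when a downstream team asks for it again."""
    row = conn.execute(
        "SELECT destination, payload FROM outbox WHERE event_id = ?", (event_id,)
    ).fetchone()
    if row is None:
        raise KeyError(event_id)
    dispatch(row[0], json.loads(row[1]))
```

If `dispatch` raises, the row stays in the table with `dispatched = 0`, so undelivered events remain visible and can be picked up later.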

Wrapping It Up: Building True Resilience

Effective error handling in event-driven systems isn’t just about writing error messages. It’s about thinking ahead, building mechanisms for retries and recovery, and designing for failure from the start. This journey taught me more than just technical lessons; it taught me about preparedness, resilience, and the importance of good communication.

Thank you for reading. I hope it helps you in your own adventures with event-driven systems.