What is Data Quality w.r.t. Machine Learning?

Data quality refers to the degree to which a dataset meets the following key requirements for reliable machine learning:

- Accuracy: The correctness and precision of data values in representing real-world facts

  - Example: Temperature readings from sensors match actual temperatures within acceptable margins. A January reading of 30 degrees Celsius in Kashmir almost certainly indicates a faulty sensor or a data-entry error.

- Completeness: The presence of all necessary data points and features

  - Example: No missing values in crucial fields

- Consistency: The uniformity of data across the dataset

  - Example: Consistent date formats, units of measurement, and naming conventions

- Validity: The conformance to defined rules, formats, and value ranges

  - Example: Ages and durations are non-negative numbers, and email addresses follow a valid format

- Relevance: How well the data reflects the current time period (timeliness)

  - Example: Training data that reflects current patterns and relationships
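Several of these dimensions translate directly into row-level checks. The sketch below uses pandas on a small hypothetical table (all column names, values, and the 20 °C plausibility threshold for Kashmir in January are assumptions for illustration) to flag accuracy, completeness, and validity problems.

```python
import pandas as pd

# Hypothetical sensor/user records; column names and values are illustrative only.
df = pd.DataFrame({
    "region": ["Kashmir", "Kashmir", "Kerala", "Kerala"],
    "month": [1, 1, 1, 7],
    "temp_c": [-2.5, 30.0, 27.1, None],
    "age": [34, -1, 52, 41],
    "email": ["a@example.com", "not-an-email", "c@example.com", "d@example.com"],
})

# Accuracy: a domain rule with an assumed plausible upper bound for January in Kashmir.
kashmir_jan = (df["region"] == "Kashmir") & (df["month"] == 1)
implausible_temp = kashmir_jan & (df["temp_c"] > 20)

# Completeness: fraction of missing values per column.
missing_fraction = df.isna().mean()

# Validity: non-negative ages and a deliberately loose email pattern.
invalid_age = df["age"] < 0
invalid_email = ~df["email"].str.match(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

report = {
    "implausible_temp_rows": df.index[implausible_temp].tolist(),
    "missing_fraction": missing_fraction[missing_fraction > 0].to_dict(),
    "invalid_age_rows": df.index[invalid_age].tolist(),
    "invalid_email_rows": df.index[invalid_email].tolist(),
}
print(report)
```

In practice, rules like these are usually kept in a shared, versioned place (for example, as expectations in a validation tool) rather than hard-coded per script.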

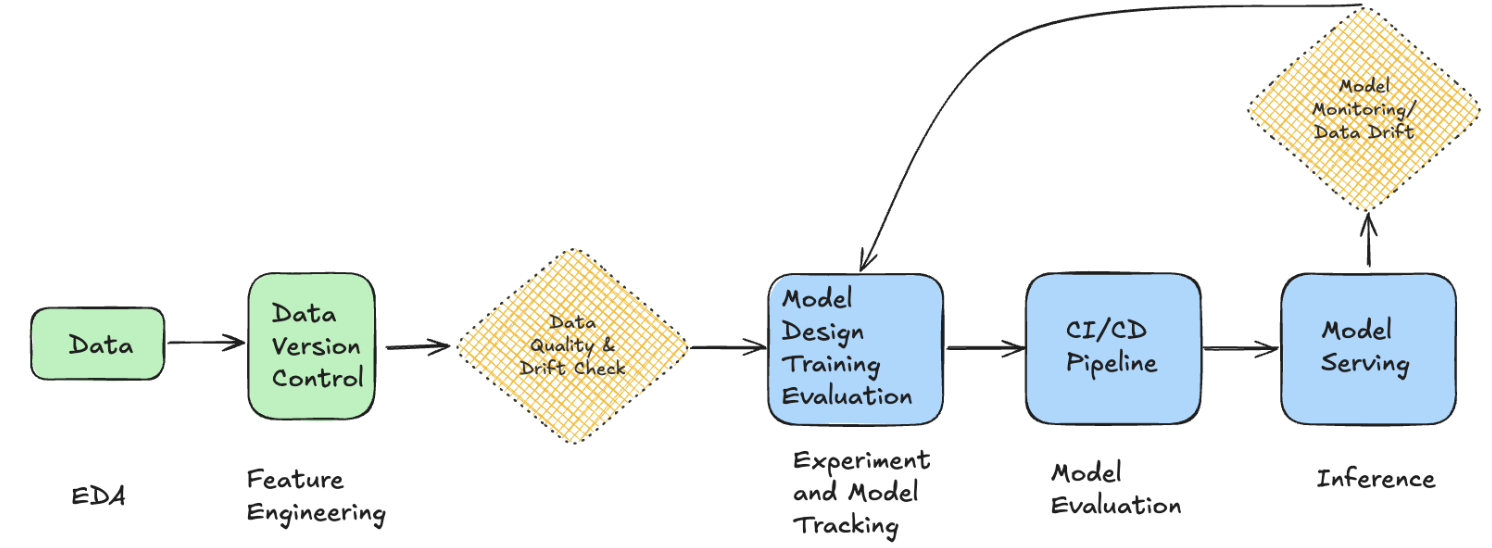

Where does Data Quality fit into the ML pipeline?

Cost of Poor Data Quality

Poor data quality at any stage of the ML pipeline can lead to:

- Unreliable model predictions: For example, an increased false-positive rate when flagging fraudulent transactions, which erodes stakeholder trust.

- Degraded business outcomes

Data Quality in Different ML Pipeline Stages

Ensuring data quality is a continuous process across:

- Training and Validation

- Production Monitoring

In this article, we focus on handling data quality during training and validation.

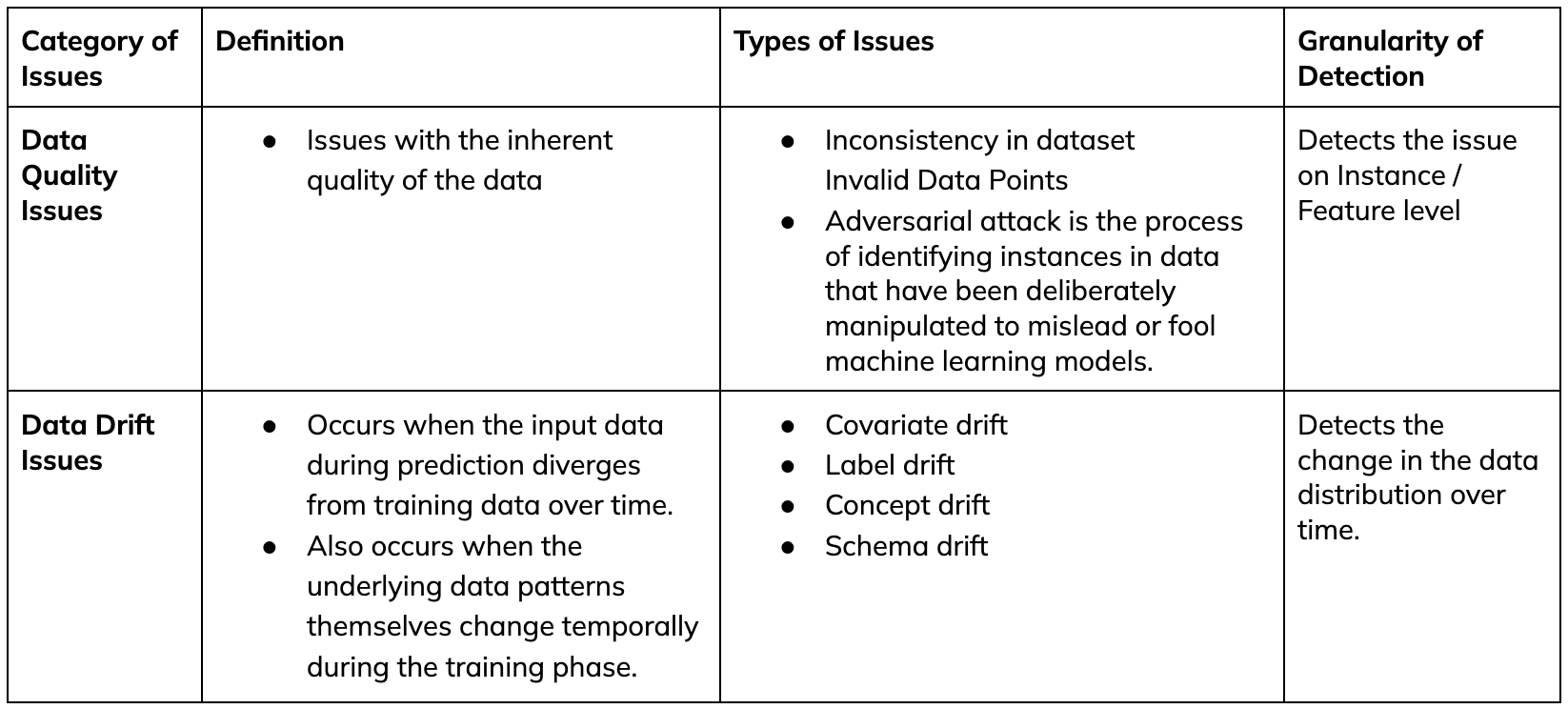

Data Quality Issues in ML Training Pipelines

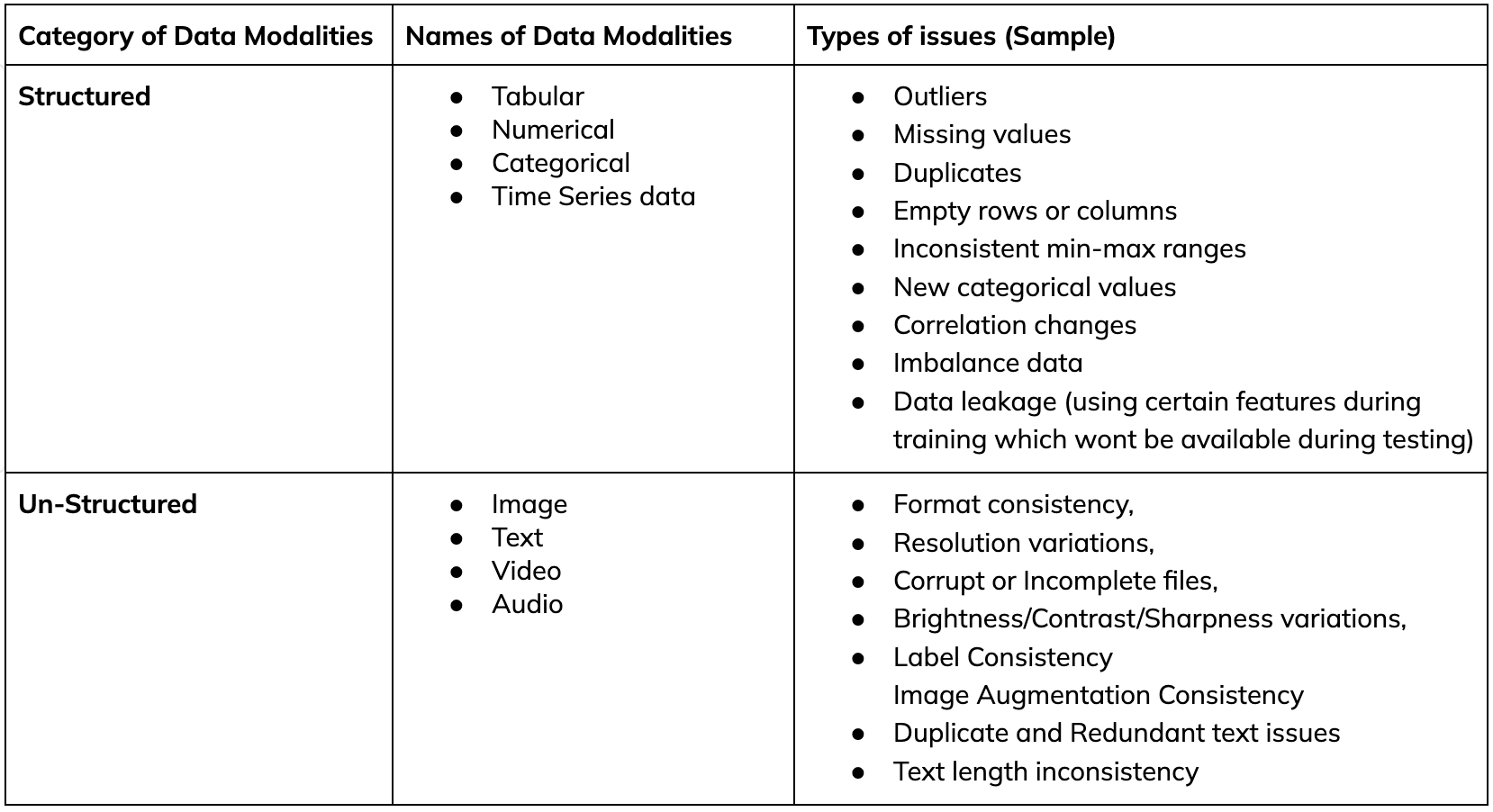

Data Modality and Issues

Detecting Issues

Basic statistical methods for detecting data quality issues:

- Distribution analysis (mean, median, standard deviation)

- Value ranges (min/max) and missing-value detection

- Checks for duplicates, cardinality, and timeliness
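The basic checks above can be bundled into a single profiling function. This is a minimal pandas sketch; the function name `basic_quality_profile`, its parameters, and the sample table are hypothetical, and timeliness is interpreted here (as an assumption) as data freshness, i.e. the share of rows older than a chosen maximum age.

```python
import pandas as pd


def basic_quality_profile(df: pd.DataFrame, timestamp_col: str,
                          max_age: pd.Timedelta, now: pd.Timestamp) -> dict:
    """Compute simple statistical data-quality indicators for a DataFrame."""
    numeric = df.select_dtypes(include="number")
    return {
        # Distribution analysis: mean, median and standard deviation per numeric column.
        "distribution": numeric.agg(["mean", "median", "std"]).to_dict(),
        # Value ranges and missing values.
        "min": numeric.min().to_dict(),
        "max": numeric.max().to_dict(),
        "missing": df.isna().sum().to_dict(),
        # Duplicates: rows identical to an earlier row.
        "duplicate_rows": int(df.duplicated().sum()),
        # Cardinality: number of distinct non-null values per column.
        "cardinality": df.nunique().to_dict(),
        # Timeliness (interpreted as freshness): share of rows older than max_age.
        "stale_fraction": float(((now - df[timestamp_col]) > max_age).mean()),
    }


# Hypothetical transactions table; column names are illustrative.
df = pd.DataFrame({
    "amount": [10.0, 25.0, 25.0, None],
    "merchant": ["A", "B", "B", "C"],
    "ts": pd.to_datetime(["2024-01-01", "2024-03-01", "2024-03-01", "2024-03-02"]),
})
profile = basic_quality_profile(df, "ts", pd.Timedelta(days=30), pd.Timestamp("2024-03-10"))
print(profile["missing"], profile["duplicate_rows"], profile["stale_fraction"])
```

Comparing such a profile between the training snapshot and each new batch is a cheap first line of defence before running formal drift tests.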

Advanced statistical methods for detecting data drift:

Drift detection in ML relies on statistical methods, primarily hypothesis testing, to determine whether the distribution of new data differs significantly from that of a reference dataset. The choice of detection algorithm depends on the data type and on the problem being detected (e.g., outliers, adversarial examples, concept drift, or covariate shift).

Key Aspects of Drift Detection:

- Types of Tests:

  - Univariate Tests: Analyze drift in individual features.

  - Multivariate Tests: Detect drift across multiple features simultaneously.

  - Two-Sample Testing: Compares new data against a reference dataset (e.g., comparing training vs. test data).

- Popular Statistical Methods:

  - Kolmogorov-Smirnov Test (for continuous distributions)

  - Maximum Mean Discrepancy (MMD) (a kernel-based distance between distributions, suited to multivariate data)

  - Chi-Squared Test (for categorical data)

- Dimensionality Reduction: Because many datasets (e.g., images or text embeddings) are high-dimensional, techniques such as PCA are often applied before drift detection. t-SNE is better suited to visualizing drift than to detecting it, since it cannot project new samples onto an existing embedding.

- Alibi Detect provides a comprehensive overview of drift detection algorithms in its documentation, categorized by task and data type, making it a valuable resource for implementing these methods.
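The univariate two-sample tests listed above can be sketched with SciPy: `scipy.stats.ks_2samp` for a continuous feature and `scipy.stats.chi2_contingency` on category counts for a categorical one. The helper names `ks_drift` and `chi2_drift`, the 0.05 significance level, and the synthetic transaction data are assumptions for illustration.

```python
from collections import Counter

import numpy as np
from scipy import stats


def ks_drift(reference, current, alpha: float = 0.05) -> tuple[bool, float]:
    """Two-sample Kolmogorov-Smirnov test for one continuous feature."""
    result = stats.ks_2samp(reference, current)
    return bool(result.pvalue < alpha), float(result.pvalue)


def chi2_drift(reference, current, alpha: float = 0.05) -> tuple[bool, float]:
    """Chi-squared test of homogeneity for one categorical feature."""
    ref_counts, cur_counts = Counter(reference), Counter(current)
    categories = sorted(set(ref_counts) | set(cur_counts))
    # 2 x k contingency table: one row per sample, one column per category.
    table = [[ref_counts[c] for c in categories],
             [cur_counts[c] for c in categories]]
    _, p_value, _, _ = stats.chi2_contingency(table)
    return bool(p_value < alpha), float(p_value)


rng = np.random.default_rng(0)
# Hypothetical transaction amounts: the new batch's mean has shifted.
train_amounts = rng.normal(loc=50.0, scale=10.0, size=2000)
new_amounts = rng.normal(loc=58.0, scale=10.0, size=2000)
amount_drift, amount_p = ks_drift(train_amounts, new_amounts)

# Hypothetical payment methods: same proportions vs. changed proportions.
train_methods = ["card"] * 700 + ["cash"] * 300
same_mix = ["card"] * 350 + ["cash"] * 150
new_mix = ["card"] * 400 + ["cash"] * 600
same_drift, same_p = chi2_drift(train_methods, same_mix)
mix_drift, mix_p = chi2_drift(train_methods, new_mix)
print(amount_drift, same_drift, mix_drift)
```

When one test is run per feature, the chance of a false alarm grows with the number of features, so a multiple-comparison correction (e.g., Bonferroni: dividing alpha by the number of features tested) is advisable. With very large samples, even practically irrelevant shifts become statistically significant, so it also helps to look at effect sizes such as the KS statistic itself.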
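For multivariate drift, MMD is often combined with dimensionality reduction. The NumPy sketch below fits PCA on the reference data only (via SVD), projects both samples, and runs an MMD permutation test with an RBF kernel whose bandwidth is set by the median heuristic. Alibi Detect ships a production-grade MMD detector; this plain version, its function names, the 200 permutations, and the synthetic 20-dimensional data are illustrative assumptions.

```python
import numpy as np


def pca_fit(reference: np.ndarray, n_components: int):
    """Fit PCA on the reference data only; returns (mean, components)."""
    mean = reference.mean(axis=0)
    # Rows of vt are principal directions, sorted by explained variance.
    _, _, vt = np.linalg.svd(reference - mean, full_matrices=False)
    return mean, vt[:n_components]


def pca_project(data: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    return (data - mean) @ components.T


def rbf_kernel(a: np.ndarray, b: np.ndarray, sigma: float) -> np.ndarray:
    sq_dists = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-sq_dists / (2.0 * sigma ** 2))


def mmd2(x: np.ndarray, y: np.ndarray, sigma: float) -> float:
    """Biased estimate of the squared MMD between samples x and y."""
    return float(rbf_kernel(x, x, sigma).mean() + rbf_kernel(y, y, sigma).mean()
                 - 2.0 * rbf_kernel(x, y, sigma).mean())


def mmd_permutation_test(x, y, n_permutations: int = 200, seed: int = 0):
    """Return (MMD^2, p-value) using a permutation test on the pooled sample."""
    rng = np.random.default_rng(seed)
    pooled = np.vstack([x, y])
    # Median heuristic: bandwidth = median pairwise distance in the pooled data.
    dists = np.sqrt(((pooled[:, None, :] - pooled[None, :, :]) ** 2).sum(axis=-1))
    sigma = float(np.median(dists[dists > 0]))
    observed = mmd2(x, y, sigma)
    n = len(x)
    exceed = 0
    for _ in range(n_permutations):
        idx = rng.permutation(len(pooled))
        if mmd2(pooled[idx[:n]], pooled[idx[n:]], sigma) >= observed:
            exceed += 1
    # +1 in numerator and denominator counts the observed split itself.
    return observed, (exceed + 1) / (n_permutations + 1)


rng = np.random.default_rng(42)
scales = np.array([3.0, 3.0] + [1.0] * 18)  # 20-D data with two high-variance axes
reference = rng.normal(size=(200, 20)) * scales
current = rng.normal(size=(200, 20)) * scales
current[:, 0] += 3.0  # simulated covariate shift along a high-variance axis

mean, components = pca_fit(reference, n_components=2)
stat, p_value = mmd_permutation_test(pca_project(reference, mean, components),
                                     pca_project(current, mean, components))
print(f"MMD^2={stat:.4f}, p={p_value:.3f}")
```

Fitting PCA on the reference set alone keeps the projection fixed, so every new batch is compared in the same reduced space. The trade-off is that a shift confined to low-variance directions can be projected away.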

Data Quality and Drift Detection Tools

Expected Capabilities of a Tool

- Problem Identification / Detection: The system should effectively detect anomalies, inconsistencies, and drift to ensure data reliability.

- Handling Large Datasets Efficiently: Large batches should be handled with caching mechanisms or parallel processing to keep validation fast.

- Comprehensive Reports and Visualization: Reports should include root cause analysis, impact assessment, historical comparisons, and trend analysis for better insights.
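One way to handle large datasets efficiently is to summarise each chunk independently, possibly in parallel, and merge the partial results. The sketch below assumes a single numeric column and uses a hypothetical `ChunkStats` class whose count, mean, and sum of squared deviations are merged with the pairwise update of Chan et al.; threads are used only for simplicity, and a process pool or a distributed framework would be the natural swap-in for CPU-heavy work.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

import numpy as np


@dataclass
class ChunkStats:
    """Mergeable summary of one numeric column: count, mean, M2, missing."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the mean
    missing: int = 0

    @classmethod
    def from_chunk(cls, chunk: np.ndarray) -> "ChunkStats":
        valid = chunk[~np.isnan(chunk)]
        if valid.size == 0:
            return cls(missing=int(chunk.size))
        mean = float(valid.mean())
        return cls(int(valid.size), mean, float(((valid - mean) ** 2).sum()),
                   int(chunk.size - valid.size))

    def merge(self, other: "ChunkStats") -> "ChunkStats":
        """Combine two summaries with the pairwise update of Chan et al."""
        n = self.count + other.count
        missing = self.missing + other.missing
        if n == 0:
            return ChunkStats(missing=missing)
        delta = other.mean - self.mean
        mean = self.mean + delta * other.count / n
        m2 = self.m2 + other.m2 + delta ** 2 * self.count * other.count / n
        return ChunkStats(n, mean, m2, missing)

    @property
    def variance(self) -> float:
        """Sample variance (ddof=1) of the non-missing values."""
        return self.m2 / (self.count - 1) if self.count > 1 else float("nan")


data = np.array([1.0, 2.0, np.nan, 4.0, 5.0, 6.0, np.nan, 8.0])
chunks = np.array_split(data, 3)  # stand-in for batches read from disk

# Summarise chunks concurrently, then reduce the partial results.
with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(ChunkStats.from_chunk, chunks))

total = ChunkStats()
for part in partials:
    total = total.merge(part)
print(total.count, total.missing, total.mean, total.variance)
```

Because each partial summary is small and merges are associative, results can also be cached per chunk and reused when only new chunks arrive.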

Tools

- Evidently: Specializes in structured/tabular data validation, offering ready-to-use dashboards for drift detection and monitoring.

- Alibi Detect: Provides a diverse set of detection methods (outlier, adversarial, and especially drift detection) for different data types, including tabular, text, and image data.

- Great Expectations: Focuses on data validation by defining and enforcing data quality expectations within ML pipelines.

Handling Poor Data Quality

Fix Quality Issues When:

- Data is expensive to recollect: When acquiring new data is costly, improving the quality of existing data is more practical.

- Time constraints prevent new data collection: When deadlines are tight, refining the current dataset ensures timely progress.

- Fixes include:

  - Missing value imputation: Filling in missing values using statistical methods or predictive models to maintain dataset integrity.

  - Outlier removal: Detecting and removing extreme values that could skew analysis and affect model performance.

  - Feature engineering: Creating new features or transforming existing ones to enhance predictive power and model accuracy.
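The first two fixes can be sketched in a few lines of pandas. This assumes median imputation and Tukey's IQR fences (k = 1.5) as the statistical methods; both choices, the `impute_and_filter` helper, and the sample data are illustrative rather than prescribed.

```python
import pandas as pd


def impute_and_filter(df: pd.DataFrame, column: str, k: float = 1.5) -> pd.DataFrame:
    """Median-impute `column`, then drop rows outside Tukey's IQR fences."""
    out = df.copy()
    # Missing value imputation: the median is robust to the outliers handled next.
    out[column] = out[column].fillna(out[column].median())
    q1, q3 = out[column].quantile([0.25, 0.75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    # Outlier removal: keep rows whose value lies inside [lower, upper].
    return out[out[column].between(lower, upper)]


# Hypothetical transaction amounts with one missing value and one extreme value.
df = pd.DataFrame({"amount": [10.0, 12.0, None, 11.0, 13.0, 500.0]})
cleaned = impute_and_filter(df, "amount")
print(cleaned)
```

Outliers are not always errors: in fraud detection, an extreme amount may be precisely the signal the model needs, so flagged rows are worth inspecting before they are dropped.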

Discard and Recollect Data When:

- Data is biased, outdated, or flawed: If the dataset is unrepresentative or contains critical errors, new data collection is necessary.

- Fixing issues is impractical: When data quality problems are too severe to correct effectively, starting fresh is the best option.

- Consider cost and feasibility before recollecting: Assess whether the expected quality gain justifies the expense and time of a new collection effort.

Conclusion

This article outlines the theoretical aspects of data quality in ML pipelines. A follow-up practical guide will focus on implementing these principles using DVC.