Modern applications frequently rely on atomic operations, with atomic counters being one of the most common use cases. For example, when multiple threads execute in parallel, increment and decrement operations must be atomic to prevent race conditions. For this discussion, I’ll be using Go as the reference language, but the core concepts apply to other programming languages as well.
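To see why, note that `counter++` is not one step but three: load the value, add one, and store the result. The sketch below replays one unlucky interleaving of two threads deterministically. `simulateLostUpdate` is a hypothetical helper, and the "threads" are modelled as local variables, an assumption made so the outcome does not depend on the scheduler.

```go
package main

import "fmt"

// simulateLostUpdate (hypothetical) replays one bad interleaving of two
// threads that both execute counter++ on a shared variable. Each thread's
// register is modelled as a local variable so the result is reproducible.
func simulateLostUpdate() int {
	counter := 0

	// Step 1 (load): both threads read the current value.
	regA := counter
	regB := counter

	// Step 2 (modify): each thread increments its private copy.
	regA++
	regB++

	// Step 3 (store): both write back; B's store overwrites A's.
	counter = regA
	counter = regB

	return counter // one increment was lost
}

func main() {
	fmt.Println("expected 2, got", simulateLostUpdate())
}
```

Making the increment atomic means the load, add, and store happen as one indivisible step, so this interleaving becomes impossible.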

Recap: Handling Race Conditions in Go

This section provides a refresher on managing race conditions in Go. If you're already familiar with the topic, feel free to skip ahead to see how atomic operations work under the hood and how they compare to mutexes.

In Go, race conditions can be prevented in several ways, including the sync/atomic package and mutexes (sync.Mutex). To illustrate, we'll launch 10,000 goroutines, each incrementing a shared counter 100 times, so the correct final value is 1,000,000.

1. Using the sync/atomic Package

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

func main() {
	start := time.Now()

	var counter int32
	var wg sync.WaitGroup

	numGoroutines := 10000
	wg.Add(numGoroutines)

	for i := 0; i < numGoroutines; i++ {
		go func() {
			defer wg.Done()
			for j := 0; j < 100; j++ {
				// Atomic read-modify-write: safe to call from many goroutines.
				atomic.AddInt32(&counter, 1)
			}
		}()
	}

	wg.Wait()

	if counter != 1_000_000 {
		panic("Wrong!")
	}

	fmt.Println("Time taken:", time.Since(start).Seconds())
}
```

Output:

```
❯ go run -race atomic_playground/main.go
Time taken: 0.269122875
```

In the code above, we use atomic.AddInt32 rather than counter++. This is where all the magic happens: the increment is performed as a single, indivisible operation. We will dive deeper into how atomicity is achieved in the sections below.
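Since Go 1.19, sync/atomic also provides typed wrappers such as `atomic.Int32`, whose methods are the only way to touch the value, so a stray non-atomic `counter++` will not even compile. A minimal variant of the example above, assuming Go 1.19 or later (`countWithAtomicType` is a hypothetical helper name):

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// countWithAtomicType (hypothetical) repeats the experiment above using the
// atomic.Int32 type. The value is only reachable through Add/Load, so every
// access is atomic by construction.
func countWithAtomicType(goroutines, increments int) int32 {
	var counter atomic.Int32
	var wg sync.WaitGroup
	wg.Add(goroutines)
	for i := 0; i < goroutines; i++ {
		go func() {
			defer wg.Done()
			for j := 0; j < increments; j++ {
				counter.Add(1)
			}
		}()
	}
	wg.Wait()
	return counter.Load()
}

func main() {
	fmt.Println(countWithAtomicType(10000, 100)) // 1000000
}
```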

2. Using Mutexes

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

func main() {
	start := time.Now()

	var mutex sync.Mutex
	var counter int32
	var wg sync.WaitGroup

	numGoroutines := 10000
	wg.Add(numGoroutines)

	for i := 0; i < numGoroutines; i++ {
		go func() {
			defer wg.Done()
			for j := 0; j < 100; j++ {
				// Only one goroutine at a time may run the increment.
				mutex.Lock()
				counter++
				mutex.Unlock()
			}
		}()
	}

	wg.Wait()

	if counter != 1_000_000 {
		panic("Wrong!")
	}

	fmt.Println("Time taken:", time.Since(start).Seconds())
}
```

Output:

```
❯ go run -race mutex_playground/main.go
Time taken: 1.226746084
```

This pattern is probably familiar and fairly self-explanatory: we lock the mutex just before incrementing the counter and unlock it immediately afterward.

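The mutex-based version also took roughly 4.5 times as long as the atomic one (about 1.23 s versus 0.27 s). Both runs used the race detector (-race), which adds substantial instrumentation overhead, so the absolute timings are inflated. I'll dive deeper into why the gap exists in the upcoming sections.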

sync/atomic: What Happens Under the Hood

The sync/atomic package achieves atomic operations by leveraging hardware and CPU-level synchronisation.

What does this mean?

To understand this better, let’s examine the source code of these functions.

Interestingly, atomic.AddInt32 is declared in sync/atomic without a function body. This is because its implementation is supplied by hand-written assembly located in the same package.

Go allows linking assembly files during compilation, enabling functions to have low-level implementations written in assembly.

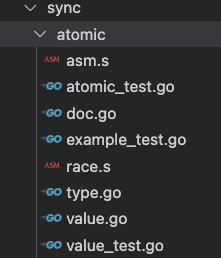

Below is a snapshot of the sync/atomic folder:

Files in sync/atomic package

The assembly for atomic.AddInt32 lives in the asm.s file:

```asm
TEXT ·AddInt32(SB),NOSPLIT,$0
	JMP runtime/internal/atomic·Xadd(SB)
```

It consists of a single jump instruction to the Xadd function in the runtime/internal/atomic package.

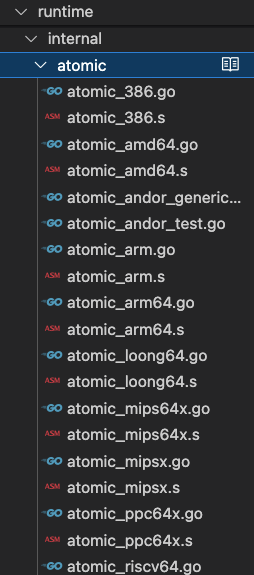

Files in runtime/internal/atomic

The runtime/internal/atomic package contains a separate assembly file for each CPU architecture. Let's look at the Xadd function in atomic_amd64.s:

```asm
// uint32 Xadd(uint32 volatile *val, int32 delta)
// Atomically:
//	*val += delta;
//	return *val;
TEXT ·Xadd(SB), NOSPLIT, $0-20
	MOVQ	ptr+0(FP), BX
	MOVL	delta+8(FP), AX
	MOVL	AX, CX
	LOCK
	XADDL	AX, 0(BX)
	ADDL	CX, AX
	MOVL	AX, ret+16(FP)
	RET
```

Note: Thanks to feedback from Reddit: in Go, sync/atomic functions such as AddInt32 are implemented as compiler intrinsics rather than regular function calls. However, they still compile down to hardware instructions like LOCK XADD, which is what ensures atomicity. The compiled code can be seen here: https://godbolt.org/z/Ezc7hdrTa

For those unfamiliar with assembly, this might seem intimidating, but let’s focus on the important bits.

The crucial instruction here is LOCK XADDL AX, 0(BX) (LOCK is a prefix that applies to the instruction on the following line).
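XADD ("exchange and add") stores `*val + delta` in memory but leaves the *old* value of `*val` in the source register. That is why Xadd saves delta in CX beforehand and runs `ADDL CX, AX` afterward: its contract is to return the new value. The Go sketch below spells out that relationship using `atomic.AddInt32`, which likewise returns the new value; `addAndReport` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"sync/atomic"
)

// addAndReport (hypothetical) mirrors Xadd's register-level steps:
// LOCK XADDL leaves the OLD value in AX, and ADDL CX, AX adds the saved
// delta back so the function returns the NEW value.
func addAndReport(val *int32, delta int32) (oldVal, newVal int32) {
	newVal = atomic.AddInt32(val, delta) // value right after this atomic add
	oldVal = newVal - delta              // what XADDL alone leaves in AX
	return oldVal, newVal
}

func main() {
	v := int32(40)
	oldVal, newVal := addAndReport(&v, 2)
	fmt.Println(oldVal, newVal, v) // 40 42 42
}
```

Because the add itself is atomic, `newVal - delta` is exactly the value this particular call observed, even when other goroutines are updating `*val` concurrently.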

The LOCK prefix ensures that the CPU has exclusive ownership of the appropriate cache line for the duration of the operation, and provides certain additional ordering guarantees. This may be achieved by asserting a bus lock, but the CPU will avoid this where possible. If the bus is locked then it is only for the duration of the locked instruction.

Source: Stack Overflow

In simpler terms

An atomic operation is one that cannot be interrupted. The LOCK prefix in assembly ensures that a given instruction is executed atomically, meaning no other processor or core can modify the memory location until the operation is complete.

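The LOCK prefix itself is specific to x86. Other architectures provide equivalent mechanisms (ARM64, for instance, offers atomic instructions such as LDADD as well as exclusive load/store pairs that retry on conflict), but the fundamental purpose is the same.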
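A portable way to express a retrying read-modify-write in Go is a compare-and-swap (CAS) loop: read the value, compute the new one, and commit only if nobody changed it in the meantime. This sketch uses only sync/atomic; `casAdd` and `runCASCounter` are hypothetical helpers, and real code should simply call `atomic.AddInt32`.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// casAdd (hypothetical) adds delta to *addr with a compare-and-swap retry
// loop. It illustrates the general pattern, not how sync/atomic implements
// AddInt32 on amd64 (which uses LOCK XADD).
func casAdd(addr *int32, delta int32) int32 {
	for {
		old := atomic.LoadInt32(addr)
		newVal := old + delta
		// The swap succeeds only if *addr still equals old, i.e. no other
		// goroutine modified it between the load and this point.
		if atomic.CompareAndSwapInt32(addr, old, newVal) {
			return newVal
		}
		// Lost the race: reload the current value and retry.
	}
}

// runCASCounter (hypothetical) repeats the earlier experiment using casAdd.
func runCASCounter(goroutines, increments int) int32 {
	var counter int32
	var wg sync.WaitGroup
	wg.Add(goroutines)
	for i := 0; i < goroutines; i++ {
		go func() {
			defer wg.Done()
			for j := 0; j < increments; j++ {
				casAdd(&counter, 1)
			}
		}()
	}
	wg.Wait()
	return atomic.LoadInt32(&counter)
}

func main() {
	fmt.Println(runCASCounter(1000, 100)) // 100000
}
```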

At first glance, this behavior seems somewhat similar to an application-level mutex, where only one thread executes a critical section while the others wait. The key difference is performance:

Mutexes make contending threads wait: a blocked goroutine (or OS thread) is parked and later rescheduled, which introduces significant overhead.

Atomic operations are individual CPU instructions that never put a thread to sleep, so they complete in far fewer cycles.
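The resemblance to a mutex is more than superficial: a lock can be built directly on an atomic instruction. The toy lock below (`spinLock` and `countWithSpinLock` are hypothetical, and this is not how sync.Mutex is implemented) acquires the lock with a single compare-and-swap when it is free; only when the CAS fails does a goroutine have to wait, which is where the extra cost comes from.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"sync/atomic"
)

// spinLock is a hypothetical toy lock built on one atomic variable.
type spinLock struct {
	state int32 // 0 = unlocked, 1 = locked
}

func (l *spinLock) Lock() {
	// Fast path: a single CAS succeeds if the lock is free.
	for !atomic.CompareAndSwapInt32(&l.state, 0, 1) {
		// Slow path: the lock is held; yield so the holder can run.
		runtime.Gosched()
	}
}

func (l *spinLock) Unlock() {
	atomic.StoreInt32(&l.state, 0)
}

// countWithSpinLock (hypothetical) protects a plain int with spinLock.
func countWithSpinLock(goroutines, increments int) int {
	var l spinLock
	var wg sync.WaitGroup
	counter := 0
	wg.Add(goroutines)
	for i := 0; i < goroutines; i++ {
		go func() {
			defer wg.Done()
			for j := 0; j < increments; j++ {
				l.Lock()
				counter++ // guarded by l
				l.Unlock()
			}
		}()
	}
	wg.Wait()
	return counter
}

func main() {
	fmt.Println(countWithSpinLock(100, 1000)) // 100000
}
```

sync.Mutex takes a similar single-CAS fast path, but under contention it spins only briefly and then parks the waiting goroutine rather than yielding in a loop.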

Next, we’ll compare atomics and mutexes in terms of performance and understand why this difference matters.

Atomic vs Mutex

The assembly code for atomic operations shows that they operate at the hardware/CPU level, whereas mutexes lock resources at the application level. This fundamental difference has a significant impact on performance.

Atomic operations leverage specialised CPU instructions (such as LOCK XADD), allowing them to execute without blocking threads or requiring context switching. Simple atomic reads (loads) can take as little as a few cycles, while atomic read-modify-write (RMW) operations such as atomic.AddInt64 in Go can take 10–100+ cycles, depending on cache locality and contention.

Mutex-based synchronisation, on the other hand, relies on blocking: under contention, threads must wait, be rescheduled, and undergo context switching, adding overhead that can range from tens to thousands of CPU cycles. When contention is low, a sync.Mutex can be acquired cheaply, since its fast path is a single atomic compare-and-swap, well within hundreds of cycles. In high-contention scenarios, however, latency increases dramatically, often costing 10,000+ cycles per context switch and significantly impacting performance.

A context switch alone takes at least 100 ns (about 300 cycles on a 3 GHz CPU) under ideal conditions, and even that is an optimistic estimate; more typically it costs thousands of cycles.
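The cycle figure is simple unit arithmetic: a 3 GHz clock ticks 3 times per nanosecond, so

$$
100\,\text{ns} \times 3\,\text{GHz} = \left(100 \times 10^{-9}\,\text{s}\right) \times \left(3 \times 10^{9}\,\text{cycles/s}\right) = 300\,\text{cycles}
$$

By the same arithmetic, a context switch costing 3,000 cycles takes about 1 µs at that clock rate.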

This makes atomic operations the preferred choice when only a single value needs to be updated atomically, whereas mutexes are necessary for more complex scenarios involving multiple operations that must be performed as a unit.
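To compare the two approaches without the race detector's instrumentation, one option is `testing.Benchmark`, which the standard library documents as a way to run custom benchmarks outside `go test`. In this sketch, `benchAtomic` and `benchMutex` are hypothetical names; `b.RunParallel` spreads `b.N` increments across GOMAXPROCS goroutines, and the results will vary by machine, so no numbers are claimed here.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"testing"
)

// benchAtomic (hypothetical) increments a shared counter atomically from
// GOMAXPROCS goroutines in parallel.
func benchAtomic(b *testing.B) {
	var counter int64
	b.RunParallel(func(pb *testing.PB) {
		for pb.Next() {
			atomic.AddInt64(&counter, 1)
		}
	})
	// RunParallel performs b.N iterations in total across all goroutines.
	if got := atomic.LoadInt64(&counter); got != int64(b.N) {
		b.Fatalf("atomic counter = %d, want %d", got, b.N)
	}
}

// benchMutex (hypothetical) does the same work guarded by a sync.Mutex.
func benchMutex(b *testing.B) {
	var mu sync.Mutex
	var counter int64
	b.RunParallel(func(pb *testing.PB) {
		for pb.Next() {
			mu.Lock()
			counter++
			mu.Unlock()
		}
	})
	if counter != int64(b.N) {
		b.Fatalf("mutex counter = %d, want %d", counter, b.N)
	}
}

func main() {
	a := testing.Benchmark(benchAtomic)
	m := testing.Benchmark(benchMutex)
	fmt.Printf("atomic: %d ns/op\n", a.NsPerOp())
	fmt.Printf("mutex:  %d ns/op\n", m.NsPerOp())
}
```

Run it with plain `go run` (no `-race` flag) so that the timings reflect the synchronisation cost rather than the detector's overhead.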

Conclusion: Atomicity Comes at a Cost

Whether you use sync/atomic or a mutex, there is always some overhead, but the cost is significantly higher for mutexes. Even atomics involve locking at the cache-line level, which prevents other CPUs from accessing that line simultaneously; however, this overhead is minimal compared to the performance impact of mutexes.