Introduction

In recent years, generative AI has transformed the way we think about building intelligent systems. With models like GPT, Claude, and DALL·E, we have gained tools that can understand, create, and reason in ways that once seemed out of reach. These models have opened the door to powerful new capabilities, from natural language understanding to dynamic content generation. However, as adoption grows, a pattern of usage has emerged: we have started using massive, general-purpose models for tasks that don’t really need all that horsepower. This leads to bloated systems, increased latency, higher operational complexity, and correspondingly larger compute bills. This is where Hybrid AI offers a more pragmatic alternative, combining the strengths of discriminative and generative models to build systems that are not only smart, but also robust, scalable, and efficient. In this article, I take a closer look at why hybrid architectures make sense, how they work, and how they can be used in real-world applications. Let’s start with the basic building blocks!

Discriminative AI: Targeted, Efficient, and Predictive

Discriminative AI models are designed to distinguish between different classes or patterns in data. They make specific predictions or mappings based on given input features. Classic examples include logistic regression, decision trees, Support Vector Machines (SVMs), and neural network classifiers. These models are widely used for tasks like spam detection, sentiment analysis, fraud detection, and image classification.

The primary advantage of discriminative models lies in their simplicity, speed, and precision. They are typically lightweight, fast at both training and inference, and require fewer computational resources. They work especially well in environments where decisions are structured and repeatable. Moreover, because they focus solely on the boundaries between classes, they often outperform generative models on supervised classification tasks in terms of accuracy and latency.

However, their major limitation is inflexibility. Discriminative models can’t generate new data or handle ambiguous, open-ended tasks well. They can’t adapt to dynamic environments or perform reasoning-related tasks. They require structured input and become increasingly brittle when context or language nuances are involved. Their performance often drops if the feature engineering is poor or if they encounter out-of-distribution data.

Generative AI Systems: Creative, Generalized, and Versatile

Generative models, on the other hand, learn the underlying data distribution to generate new data instances. Large Language Models (LLMs) like GPT, DALL·E for image generation, and diffusion models for art or video synthesis are typical examples. These models can write essays, answer open-ended questions, generate synthetic images, simulate speech, and much more.

The power of generative AI lies in its versatility and contextual understanding. Because these models are trained on vast and diverse datasets, they can generalize across domains, reason abstractly, and perform zero-shot or few-shot learning with ease. This makes them invaluable for creative tasks, conversational agents, code generation, and document summarization.

But this power comes at a cost. Generative models are computationally expensive, slower to respond, and often overkill for routine tasks like binary classification, structured decision-making, or filtering. Moreover, they can be prone to hallucination and require guardrails to ensure reliability and safety. Their outputs are less deterministic and harder to verify, which matters especially for critical decisions.

The Overkill Problem: Using a Sledgehammer for a Thumbtack

In the current AI landscape, there’s a growing trend of deploying large generative models for every task, even when the task is simple, repetitive, or easily solved using a discriminative approach. This is predominantly because such models are easy to use: they require no effort spent on collecting task-specific data or training dedicated discriminative models. However, calling an LLM to perform a simple task like classifying a support ticket as “urgent” or “non-urgent” introduces unnecessary latency and compute load when a logistic regression model would do the job just as well, if not better. This misallocation of AI resources leads to higher inference costs, reduced system responsiveness, and greater environmental impact. Additionally, relying solely on generative models for decision-making can be risky, as their outputs may lack consistency and transparency.
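To make the ticket example concrete, here is a minimal sketch of a logistic regression classifier over bag-of-words features, written in plain Python so that it runs without any dependencies. The training tickets, the function names, and the hyperparameters (`epochs`, `lr`, `threshold`) are all assumptions chosen for illustration; a real system would train on actual labeled tickets, most likely with an established machine learning library.

```python
import math
from collections import Counter

# Hypothetical toy training data: (ticket text, 1 = urgent, 0 = non-urgent).
TICKETS = [
    ("payment failed and account is locked", 1),
    ("site is down cannot log in", 1),
    ("urgent refund needed charged twice", 1),
    ("service outage please fix immediately", 1),
    ("how do I change my profile picture", 0),
    ("question about newsletter settings", 0),
    ("please update my mailing address", 0),
    ("feature suggestion for the dashboard", 0),
]

def featurize(text):
    """Bag-of-words counts for a lowercased, whitespace-tokenized ticket."""
    return Counter(text.lower().split())

def predict_proba(weights, bias, text):
    """Probability that a ticket is urgent under the logistic model."""
    z = bias + sum(weights.get(tok, 0.0) * n for tok, n in featurize(text).items())
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=200, lr=0.5):
    """Fit weights with plain stochastic gradient descent on the log loss."""
    weights, bias = {}, 0.0
    for _ in range(epochs):
        for text, label in data:
            error = predict_proba(weights, bias, text) - label
            for tok, n in featurize(text).items():
                weights[tok] = weights.get(tok, 0.0) - lr * error * n
            bias -= lr * error
    return weights, bias

def classify(weights, bias, text, threshold=0.5):
    """Map the predicted probability to one of the two ticket labels."""
    return "urgent" if predict_proba(weights, bias, text) >= threshold else "non-urgent"

WEIGHTS, BIAS = train(TICKETS)
```

Once trained, a call such as `classify(WEIGHTS, BIAS, "account is locked")` costs only a handful of dictionary lookups and one exponential, which illustrates why a model of this kind adds so little latency compared with an LLM call.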

Hybrid AI: The Best of Both Worlds

This is where Hybrid AI, or composite AI, steps in. By integrating discriminative and generative models, we can get the best of both worlds. Discriminative models handle well-scoped, fast-executing tasks like filtering, ranking, or routing, while generative models are called upon for tasks that require reasoning, synthesis, or abstraction. For example, in a customer support pipeline, a discriminative model might classify and route incoming queries to the right department, while a generative model drafts context-aware responses for human consumption. This hybrid architecture ensures speed, reliability, and scalability, while keeping costs and complexity in check.

Real-world Use Case: A Conversational Customer Assistant

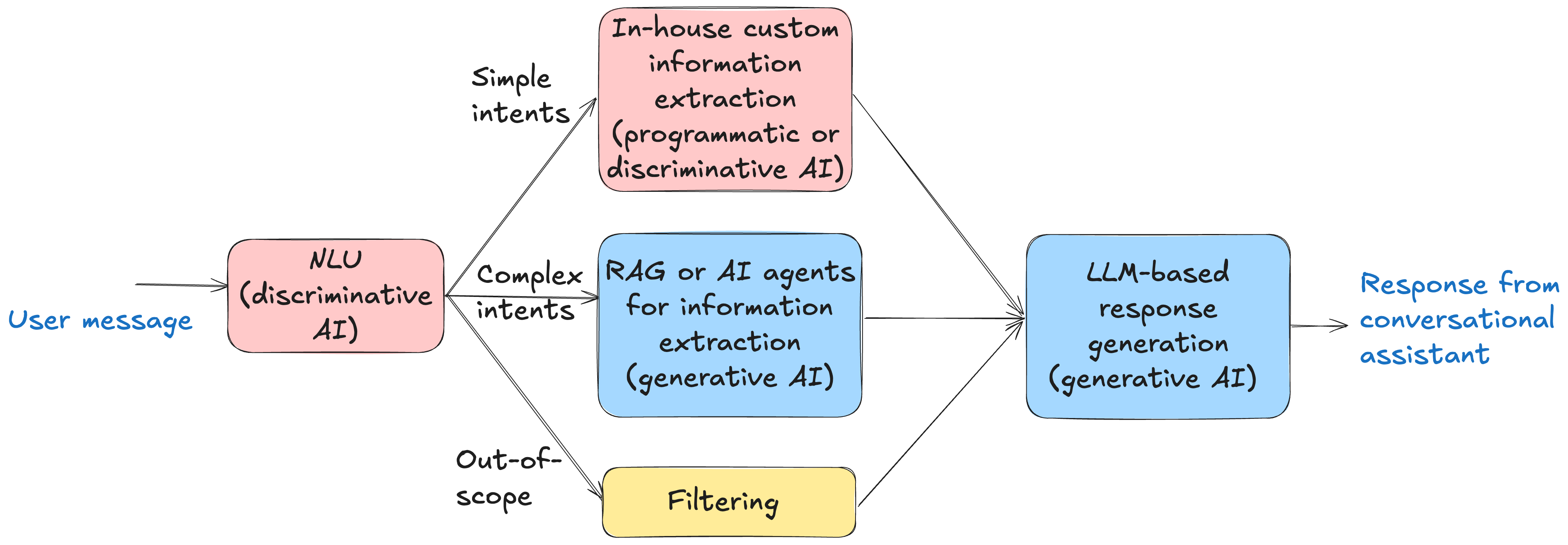

A customer assistant that serves the users of a specific business has a predefined scope of operation. Such an assistant is supposed to answer user queries related to the products and services offered by the business, and is not supposed to answer generic questions. This makes it a compelling use case for a hybrid AI system. We have developed such a system as part of Sahaj AI Labs, and the schematic shown above is the one we designed for a hybrid conversational AI assistant.

In this architecture, a discriminative Natural Language Understanding (NLU) component performs intent classification and entity extraction. The intent classifier assigns each user message to one of a predefined set of intents. It is also responsible for filtering out messages that fall outside the scope of operation of the business. This gives us rigid control over the kind of user messages that the conversational assistant responds to. It also ensures that malicious and hateful messages are never answered, protecting the brand reputation of the business.

Once the discriminative component has identified the intent of the user message, together with the relevant entities, simpler intents are handled by custom in-house response generators written programmatically. For example, if the user query is about the price of a product or service, the relevant information is retrieved from a database or through an API call and presented to the user. Such a response can easily be produced without making an LLM call.

On the other hand, if the user query belongs to a more complex intent, we may need to invoke LLMs, either in a RAG framework or in an agentic framework. For example, if the user asks for the best subscription plan for a service given specific needs, we invoke an LLM and pass it the required information in a RAG-style or agentic fashion. Finally, an LLM call is made to write the response in natural language, using the information extracted from the enterprise database. By combining discriminative and generative AI with multiple programmatic or rule-based modules, we were able to strike a balance between accuracy, latency, cost, and user satisfaction.
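The routing logic described above can be sketched roughly as follows. This is an illustrative outline under stated assumptions, not the system we deployed: the keyword rules stand in for a trained intent classifier, `PRICES` stands in for a database or API lookup, `call_llm` is a placeholder for a real generative model call, and every name in the block is hypothetical.

```python
import re

# Hypothetical price table standing in for a database lookup or API call.
PRICES = {"basic": 5.0, "premium": 12.0}

# Hypothetical keyword rules standing in for a trained intent classifier.
INTENT_KEYWORDS = {
    "price_query": ("price", "cost", "how much"),
    "plan_recommendation": ("recommend", "best plan", "which plan"),
}

def classify_intent(message):
    """Return a known intent, or 'out_of_scope' when nothing matches."""
    text = message.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "out_of_scope"

def extract_product(message):
    """Entity extraction stub: find the first known product name, if any."""
    match = re.search("|".join(PRICES), message.lower())
    return match.group(0) if match else None

def call_llm(prompt):
    """Placeholder for a generative model call (RAG-style or agentic)."""
    return f"[LLM response for: {prompt}]"

def respond(message):
    """Route a message and return (path taken, response text)."""
    intent = classify_intent(message)
    if intent == "out_of_scope":
        # Out-of-scope messages never reach the generative model.
        return "rejected", "Sorry, I can only help with our products and services."
    if intent == "price_query":
        # Simple intent: answer programmatically, with no LLM call.
        product = extract_product(message)
        if product is not None:
            return "programmatic", f"The {product} plan costs ${PRICES[product]:.2f} per month."
        return "programmatic", "Which plan would you like the price for?"
    # Complex intent: pass retrieved context to the generative model.
    context = ", ".join(f"{name}: ${price:.2f}" for name, price in PRICES.items())
    return "generative", call_llm(f"Plans: {context}. User asks: {message}")
```

In this sketch only the plan recommendation intent reaches `call_llm`. Price queries are answered from the lookup table, and anything the classifier does not recognize is declined before any generative model is involved, which is the property that keeps both cost and exposure to unwanted prompts low.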

Conclusion

While the world is rapidly embracing generative AI for its ease of use and ability to bypass the need for task-specific data collection and model training, there are still scenarios where simpler solutions are more effective. Generative models offer convenience, but they often come with trade-offs in cost, latency, and infrastructure demands. In many cases, building small, focused models for simpler tasks can lead to more efficient and scalable systems. Striking the right balance between convenience and optimization is key to smart AI adoption. As AI systems continue to mature, it’s becoming clear that no single model type fits all problems. Generative AI has expanded the frontier of what’s possible, but to build systems that are truly robust, responsive, and cost-effective, we need to be more strategic. Hybrid AI, blending discriminative and generative approaches, offers a balanced architecture that maximizes impact while minimizing inefficiencies. By aligning the complexity of the model with the complexity of the task, teams can deliver smarter solutions at scale. The future of AI isn’t just bigger models; it’s better orchestration of the right tools for the right job.

References

Karthika Vijayan and Shruti Dhavalikar, “Combining Discriminative and Generative AI for Dedicated Conversational Assistants”, in Workshop on Composite AI at the 27th European Conference on Artificial Intelligence, October 2024, Santiago de Compostela, Spain. https://filuta.ai/images/compai/CompAI_paper_10.pdf

Acknowledgments: I would like to thank Dr. Ravindra Babu T, Dr. Oshin Anand, and Shruti Dhavalikar for their time and effort in reviewing this article.