Introduction

In today’s world, where Retrieval-Augmented Generation (RAG) pipelines are increasingly integrated into applications, it’s more important than ever to thoroughly test these systems.

Large Language Models (LLMs) are powerful but not perfect. They can hallucinate, generating information that isn’t in the context, and they sometimes provide answers that are irrelevant to the user’s query. These issues, or “smells,” can harm the reliability of your application, which makes it essential to test your RAG pipeline thoroughly both before and after deployment.

As open-source LLMs continue to surge in popularity, distinguishing their strengths and weaknesses has become a nuanced challenge. With open-source models evolving rapidly, the hunt for the most capable LLM, especially for your RAG application, has never been more intriguing.

Key Considerations for Evaluating RAG Pipelines

Before diving into the specifics of testing RAG pipelines, it’s crucial to understand what “success” looks like in this context. A well-functioning RAG pipeline isn’t just about retrieving relevant information—it’s about seamlessly combining retrieval with generation to produce outputs that are:

Factually Accurate: The generated response must reflect the retrieved information correctly without introducing hallucinations.

Contextually Relevant: Both the retrieval and generation components should align with the user’s query and intent.

Coherent and Readable: The output should be fluently written and logically structured, ensuring usability in real-world applications.

These success metrics highlight the complexity of RAG evaluation, which requires going beyond simplistic accuracy checks. RAGAS is a framework that helps us evaluate RAG applications against criteria like these.

Data Needed for Testing a RAG Application

You need the following data to test your RAG application:

Ground truth (also called the reference): the correct answer to the question

Answer generated by the LLM

Context retrieved by the retriever

Question/query by the user

Please note that while the terminology may vary across frameworks, the underlying principles remain consistent.
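To keep these four pieces of data together, each test case can be stored as a small record. The sketch below is plain Python rather than RAGAS code: the class name `EvalRecord` and its `has_ground_truth` helper are hypothetical, while the field names mirror those used by RAGAS’s `SingleTurnSample` in version 0.2.x (`user_input`, `retrieved_contexts`, `response`, `reference`).

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class EvalRecord:
    """One test case for a RAG pipeline (hypothetical helper, not part of RAGAS)."""

    user_input: str                                               # question/query by the user
    retrieved_contexts: List[str] = field(default_factory=list)  # chunks returned by the retriever
    response: str = ""                                            # answer generated by the LLM
    reference: Optional[str] = None                               # ground truth, if one exists

    def has_ground_truth(self) -> bool:
        # Reference-based metrics (e.g. factual correctness) need a non-empty reference.
        return bool(self.reference)


record = EvalRecord(
    user_input="Where is the Eiffel Tower located?",
    retrieved_contexts=["The Eiffel Tower is located in Paris."],
    response="It is in Paris.",
    reference="The Eiffel Tower is located in Paris.",
)
print(record.has_ground_truth())
```

A record built from live production traffic would typically leave `reference` as `None`.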

Metrics for Testing

Here are a few useful metrics that RAG testing frameworks provide for testing your RAG pipeline:

Faithfulness: Faithfulness measures the factual consistency of the generated answer against the retrieved context. (Is the answer generated by the LLM actually based on the context retrieved by the retriever?)

Answer Relevancy / Response Relevancy: Response relevancy assesses how pertinent the generated answer is to the given prompt or question. (Is the answer generated by the LLM actually the answer to the question I asked?)

Context Relevancy / Context Precision: Context precision measures the proportion of relevant chunks in the retrieved context. (Are the chunks retrieved relevant to my query?)

Context Recall: Context recall evaluates how many of the relevant documents or pieces of information were successfully retrieved. (Were all the chunks relevant to the correct answer actually retrieved?)

Factual Correctness: Factual correctness measures how accurate the generated answer is when compared to the ground truth. (Is the answer generated by the LLM factually correct and aligned with the right answer?)

Any metric whose guiding question mentions the correct or right answer needs ground truth to be evaluated. In the list above, that applies to context recall and factual correctness.
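Once each claim or chunk has been judged, several of these metrics reduce to simple ratios. The sketch below assumes the verdicts are already available (RAGAS obtains them from a critique LLM; here they are hand-labelled booleans) and follows the formulas described in the RAGAS documentation: faithfulness is the share of answer claims supported by the context, context recall is the share of reference claims found in the context, and context precision is the average of precision@k taken at each rank that holds a relevant chunk. The function names are illustrative, not RAGAS APIs.

```python
from typing import Sequence


def ratio(verdicts: Sequence[bool]) -> float:
    """Share of True verdicts; 0.0 for an empty list."""
    return sum(verdicts) / len(verdicts) if verdicts else 0.0


def faithfulness(claim_supported_by_context: Sequence[bool]) -> float:
    # One verdict per claim extracted from the generated answer.
    return ratio(claim_supported_by_context)


def context_recall(reference_claim_in_context: Sequence[bool]) -> float:
    # One verdict per claim extracted from the ground truth.
    return ratio(reference_claim_in_context)


def context_precision(chunk_is_relevant: Sequence[bool]) -> float:
    # Verdicts ordered by retrieval rank (index 0 = top-ranked chunk).
    hits = 0
    weighted_sum = 0.0
    for k, relevant in enumerate(chunk_is_relevant, start=1):
        if relevant:
            hits += 1
            weighted_sum += hits / k  # precision@k, counted only at relevant ranks
    return weighted_sum / hits if hits else 0.0


print(faithfulness([True, True, False]))       # 2 of 3 answer claims supported
print(context_recall([True, False]))           # 1 of 2 reference claims retrieved
print(context_precision([True, False, True]))  # (1/1 + 2/3) / 2
```

Because context precision weights each hit by its precision@k, ranking the relevant chunk first scores higher than retrieving the same chunk further down.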

How to Generate the Ground Truth

Getting the ground truth right is crucial. I can suggest two ways to generate it:

Human/SME: Anyone with enough knowledge of the subject can provide a set of questions and answers to use as test queries and ground truth.

A better LLM: Leverage state-of-the-art models (e.g., OpenAI’s GPT-4) to generate reference answers. This approach is trickier because the model can still produce inaccurate answers, which would then be treated as ground truth.
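Whichever source you use, it helps to keep the question/answer pairs in one place and record where each reference came from, so that LLM-generated answers can be spot-checked separately. The sketch below assumes a hypothetical CSV layout with `question`, `reference` and `source` columns; none of these names come from RAGAS.

```python
import csv
import io
from typing import Dict, List


def load_golden_set(csv_text: str) -> List[Dict[str, str]]:
    """Parse question/reference pairs from CSV text (hypothetical column layout)."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        question = (row.get("question") or "").strip()
        reference = (row.get("reference") or "").strip()
        if not question or not reference:
            continue  # a usable test case needs both a query and its ground truth
        source = (row.get("source") or "").strip() or "unknown"
        rows.append({"user_input": question, "reference": reference, "source": source})
    return rows


data = """question,reference,source
Where is the Eiffel Tower located?,The Eiffel Tower is located in Paris.,sme
When was the Eiffel Tower completed?,It was completed in 1889.,llm
"""
golden = load_golden_set(data)
needs_review = [r for r in golden if r["source"] == "llm"]  # LLM-written references to verify
print(len(golden), len(needs_review))
```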

Testing RAG Pipelines: Development vs. Production

When testing a RAG application in development, you have the advantage of access to ground truth data: a predefined set of correct answers or expected outputs for specific queries. This lets you evaluate the system against all of the metrics above, including those that need ground truth.

You can set up a pipeline in the development phase to process queries, compare results against ground truth, and compute these metrics to provide a detailed picture of your RAG system’s performance. This iterative testing helps refine both the retrieval and generation components.
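Such a development-phase loop can be sketched as follows. `rag_pipeline` and the metric functions are hypothetical stand-ins (a stub retriever/generator and a crude word-overlap score standing in for factual correctness) so that the example runs on its own; in practice you would call your real pipeline and the framework’s metrics.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Hypothetical signatures: question -> (answer, retrieved chunks), and
# (question, answer, contexts, reference) -> score in [0, 1].
Pipeline = Callable[[str], Tuple[str, List[str]]]
Metric = Callable[[str, str, List[str], str], float]


def evaluate_dev(golden: List[Dict[str, str]], rag_pipeline: Pipeline,
                 metrics: Dict[str, Metric]) -> Dict[str, float]:
    """Run every golden question through the pipeline and average each metric."""
    scores: Dict[str, List[float]] = {name: [] for name in metrics}
    for case in golden:
        answer, contexts = rag_pipeline(case["user_input"])
        for name, metric in metrics.items():
            scores[name].append(metric(case["user_input"], answer, contexts, case["reference"]))
    return {name: mean(values) if values else 0.0 for name, values in scores.items()}


def stub_pipeline(question: str) -> Tuple[str, List[str]]:
    # Stand-in for a real retriever + generator.
    return "The Eiffel Tower is in Paris.", ["The Eiffel Tower is located in Paris."]


def word_overlap(question: str, answer: str, contexts: List[str], reference: str) -> float:
    # Crude stand-in metric: share of reference words that also appear in the answer.
    ref_words = set(reference.lower().split())
    return len(ref_words & set(answer.lower().split())) / len(ref_words)


golden = [{"user_input": "Where is the Eiffel Tower located?",
           "reference": "The Eiffel Tower is located in Paris."}]
print(evaluate_dev(golden, stub_pipeline, {"word_overlap": word_overlap}))
```

Keeping the metrics in a dictionary makes it easy to rerun the same golden set after each change to the retriever or the prompt and compare the averages.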

However, in a production environment, the situation is quite different:

Lack of Ground Truth: For real user queries, you generally do not have a predefined correct answer. This means metrics that need a reference, such as factual correctness and context recall, cannot be computed.

Focus on User Experience: To evaluate whether the system is delivering a satisfactory experience, you rely on more subjective, context-driven metrics:

Answer Relevance: Does the generated response sufficiently address the user’s query? This can sometimes be inferred through implicit feedback, such as whether users continue engaging with the system or abandon it.

Context Relevance: Are the retrieved documents or context passages used to generate the answer relevant to the query?

By focusing on these indicators in production, you can ensure that your RAG application continues to provide meaningful and relevant responses, even in the absence of ground truth data.
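The split between development and production follows directly from which inputs each metric needs. The mapping below is derived from the metric definitions earlier in this article (it is not RAGAS’s internal list of required columns, and the names are illustrative); given the fields a record actually has, it returns the metrics that can still be computed.

```python
from typing import Dict, FrozenSet, List, Set

# Inputs each metric needs, following the definitions in "Metrics for Testing".
METRIC_INPUTS: Dict[str, FrozenSet[str]] = {
    "faithfulness": frozenset({"response", "retrieved_contexts"}),
    "answer_relevancy": frozenset({"user_input", "response"}),
    "context_relevancy": frozenset({"user_input", "retrieved_contexts"}),
    "context_recall": frozenset({"retrieved_contexts", "reference"}),
    "factual_correctness": frozenset({"response", "reference"}),
}


def applicable_metrics(available_fields: Set[str]) -> List[str]:
    """Metrics whose inputs are all present, in sorted order."""
    return sorted(name for name, needed in METRIC_INPUTS.items() if needed <= available_fields)


production = {"user_input", "response", "retrieved_contexts"}  # no ground truth
development = production | {"reference"}

print(applicable_metrics(production))
print(applicable_metrics(development))
```

Note that faithfulness also survives in production, since it compares the answer only with the retrieved context.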

There are plenty of frameworks for RAG pipeline testing, such as RAGAS, DeepEval, TruLens, and many more. Let’s take a deeper look at the RAGAS framework.

The RAGAS Framework: A Comprehensive Solution

The RAGAS framework is a comprehensive methodology designed to evaluate RAG pipelines. By breaking down RAG pipelines into measurable components, RAGAS provides a systematic way to evaluate performance and identify areas for improvement.

Source: RAGAS

Understanding Metrics in RAGAS: LLM-Based vs. Non-LLM-Based Approaches

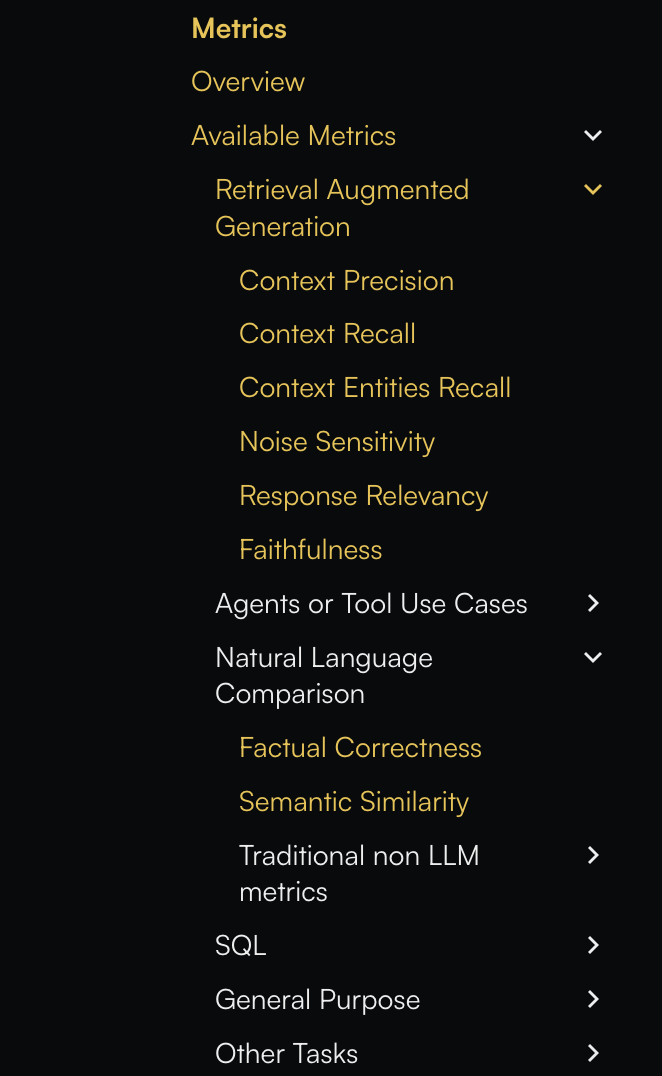

As evident from the above image, RAGAS provides a range of metrics to evaluate the effectiveness of responses. These metrics can be broadly categorised into LLM-based and Non-LLM-based approaches. Understanding the distinction between these categories is key to leveraging RAGAS effectively.

LLM-Based vs. Non-LLM-Based Metrics

The primary difference between LLM-based and Non-LLM-based metrics lies in their evaluation mechanism:

LLM-Based Metrics: These utilise an LLM to generate scores. They are particularly valuable for evaluating aspects like factual correctness, where nuanced understanding is required.

Non-LLM-Based Metrics: These rely on conventional methods, such as NLP string matching algorithms or statistical analysis, to assess performance. While simpler, they are often less effective for complex tasks like measuring factual accuracy.
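To make the non-LLM approach concrete, here is a sketch of a string-matching context precision: a retrieved chunk counts as relevant if it is similar enough to one of the reference chunks, and the verdicts are combined by averaging precision@k over the ranks that hold a relevant chunk. As far as its documentation describes, RAGAS’s `NonLLMContextPrecisionWithReference` works in a similar way but with its own string-distance measure; Python’s `difflib.SequenceMatcher` and the 0.5 threshold here are stand-ins chosen for illustration.

```python
from difflib import SequenceMatcher
from typing import List


def is_relevant(chunk: str, reference_contexts: List[str], threshold: float = 0.5) -> bool:
    # Relevant if the best similarity ratio against any reference chunk reaches the threshold.
    best = max((SequenceMatcher(None, chunk, ref).ratio() for ref in reference_contexts),
               default=0.0)
    return best >= threshold


def non_llm_context_precision(retrieved: List[str], reference_contexts: List[str],
                              threshold: float = 0.5) -> float:
    verdicts = [is_relevant(chunk, reference_contexts, threshold) for chunk in retrieved]
    hits, weighted_sum = 0, 0.0
    for k, relevant in enumerate(verdicts, start=1):
        if relevant:
            hits += 1
            weighted_sum += hits / k  # precision@k at each relevant rank
    return weighted_sum / hits if hits else 0.0


reference = ["The Eiffel Tower is located in Paris."]
print(non_llm_context_precision(["The Eiffel Tower is located in Paris."], reference))  # 1.0
print(non_llm_context_precision(["zzzz", "The Eiffel Tower is located in Paris."], reference))  # 0.5
```

The weakness is visible immediately: a paraphrase with different wording can fall below the threshold even when it says the same thing, which is exactly where an LLM-based metric helps.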

For instance, when assessing factual correctness, we need the deep contextual understanding that only an LLM can provide. In such cases, the LLM acts as a critique LLM, evaluating responses based on its advanced reasoning capabilities.
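The critique-LLM pattern can be sketched as follows: the judge model is asked a yes/no question about each claim, and the verdicts are turned into a score. Here the judge is any callable that takes a prompt and returns text, so a real model client could be plugged in; the prompt wording, the `judge` parameter, the pre-split claims and the toy judge are all assumptions for illustration. The scoring (F1 over claims, where answer claims backed by the ground truth are true positives, unbacked answer claims are false positives, and ground-truth claims missing from the answer are false negatives) is similar to how RAGAS describes its factual correctness metric, but this is not RAGAS code.

```python
from typing import Callable, List

Judge = Callable[[str], str]  # prompt in, model text out (e.g. a wrapper around an LLM client)

PROMPT = (
    "Context:\n{context}\n\n"
    "Claim: {claim}\n"
    "Is the claim fully supported by the context? Answer yes or no."
)


def supported(claims: List[str], context: str, judge: Judge) -> List[bool]:
    # Ask the critique model about each claim separately and parse a yes/no verdict.
    return [judge(PROMPT.format(context=context, claim=c)).strip().lower().startswith("yes")
            for c in claims]


def factual_correctness_f1(response_claims: List[str], reference_claims: List[str],
                           response: str, reference: str, judge: Judge) -> float:
    tp = sum(supported(response_claims, reference, judge))  # answer claims backed by ground truth
    fp = len(response_claims) - tp                          # answer claims not in ground truth
    fn = len(reference_claims) - sum(supported(reference_claims, response, judge))  # missed facts
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def toy_judge(prompt: str) -> str:
    # Stand-in for a real critique LLM: "supported" means the claim text appears in the context.
    context, rest = prompt.split("\n\nClaim: ")
    claim = rest.split("\n")[0]
    return "yes" if claim.rstrip(".") in context else "no"


score = factual_correctness_f1(
    response_claims=["The Eiffel Tower is in Paris", "It is made of wood"],  # second is wrong
    reference_claims=["The Eiffel Tower is in Paris", "It was completed in 1889"],
    response="The Eiffel Tower is in Paris. It is made of wood.",
    reference="The Eiffel Tower is in Paris. It was completed in 1889.",
    judge=toy_judge,
)
print(score)
```

Swapping `toy_judge` for a call to a strong model is what turns this into an LLM-based metric; the scoring code stays the same.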

Let’s explore how RAGAS applies both LLM-based and Non-LLM-based metrics using the example of context precision. Below is a small practical implementation with RAGAS:

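RAGAS version: 0.2.7

```python
import asyncio

from langchain_openai import ChatOpenAI  # requires the langchain-openai package
from ragas import SingleTurnSample
from ragas.llms import LangchainLLMWrapper
from ragas.metrics import (
    LLMContextPrecisionWithReference,
    NonLLMContextPrecisionWithReference,
)

# The LLM-based metric needs a critique LLM. "gpt-4o" is one GPT-4-class choice;
# ChatOpenAI reads OPENAI_API_KEY from the environment.
evaluator_llm = LangchainLLMWrapper(ChatOpenAI(model="gpt-4o"))

context_precision_with_llm = LLMContextPrecisionWithReference(llm=evaluator_llm)
context_precision_without_llm = NonLLMContextPrecisionWithReference()

sample = SingleTurnSample(
    user_input="Where is the Eiffel Tower located?",
    reference="The Eiffel Tower is located in Paris.",  # ground truth, used by the LLM-based metric
    retrieved_contexts=["The Eiffel Tower is located in Paris."],
    # The non-LLM metric compares retrieved chunks with reference chunks via string similarity.
    reference_contexts=["The Eiffel Tower is located in Paris."],
)


async def main() -> None:
    # single_turn_ascore is a coroutine, so the scores must be awaited.
    print(await context_precision_with_llm.single_turn_ascore(sample))
    print(await context_precision_without_llm.single_turn_ascore(sample))


asyncio.run(main())
```

Choosing the Right Critique LLM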

Selecting an appropriate critique LLM is crucial for achieving reliable results. An arbitrary or underperforming LLM cannot serve this purpose effectively. For the best outcomes, the critique LLM should be one of the top-performing models available.

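RAGAS uses OpenAI models by default, but the critique LLM is configurable: other models can be plugged in through wrappers such as LangchainLLMWrapper. A strong model such as GPT-4 makes LLM-based metrics more robust and accurate, although even a top model can misjudge, so its scores are best treated as informed estimates rather than ground truth.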
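One practical way to check whether a candidate critique LLM is good enough is to compare its verdicts with human (SME) labels on a small calibration set before trusting it at scale. This check is a suggested practice, not a RAGAS feature. The sketch below computes raw agreement and Cohen’s kappa, which corrects for the agreement two raters would reach by chance; the example labels are made up for illustration.

```python
from collections import Counter
from typing import Sequence, Tuple


def agreement_and_kappa(judge: Sequence[int], human: Sequence[int]) -> Tuple[float, float]:
    """Raw agreement and Cohen's kappa between two equally long label sequences."""
    if len(judge) != len(human) or not judge:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(judge)
    observed = sum(j == h for j, h in zip(judge, human)) / n
    judge_counts, human_counts = Counter(judge), Counter(human)
    # Chance agreement: probability that both raters independently pick the same label.
    expected = sum(judge_counts[label] * human_counts[label] for label in judge_counts) / (n * n)
    if expected == 1.0:
        return observed, 1.0  # both raters used the same single label throughout
    return observed, (observed - expected) / (1 - expected)


judge_verdicts = [1, 1, 0, 1, 0, 1]  # 1 = "supported" according to the critique LLM
human_verdicts = [1, 0, 0, 1, 0, 1]  # SME labels for the same items
print(agreement_and_kappa(judge_verdicts, human_verdicts))
```

A kappa close to 1 means the judge agrees with your experts well beyond chance; how much agreement is enough depends on the application.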

Conclusion

Testing RAG pipelines is a crucial step in building reliable and effective applications. With frameworks like RAGAS, developers can evaluate their systems comprehensively, addressing metrics such as factual correctness, context relevance, and answer faithfulness. While testing in development focuses on metrics driven by ground truth, production testing emphasises user experience and relevance.

The RAGAS framework’s combination of LLM-based and non-LLM-based metrics offers a well-rounded approach to evaluation, helping your application meet the high standards required for real-world use. By leveraging robust critique LLMs and iterative testing, you can refine your RAG pipeline to deliver accurate, relevant, and coherent responses in this rapidly evolving landscape.